AI is one of the biggest buzzwords in the technology world, and the field of 3D modeling is no exception. As tools built on large language models (LLMs), such as ChatGPT, Perplexity, and Gemini, continue to take off, many people are asking: can AI make 3D models?

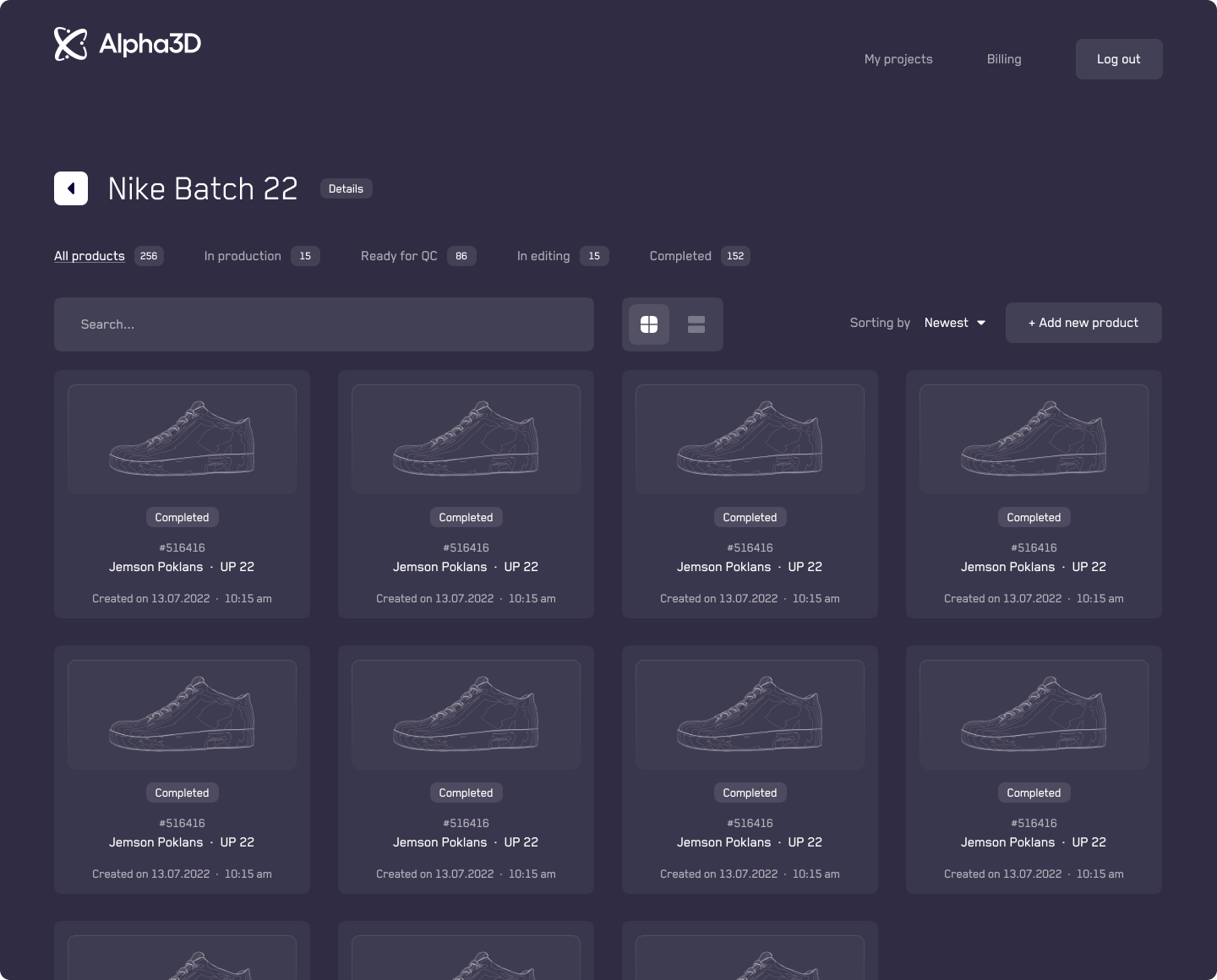

As a matter of fact, it can! From entry-level AI 3D modelers like Alpha3D and Hyper3D to the industrially focused 3D Foundry Labs, there are now plenty of AI-driven 3D modeling options on the market.

However, it’s also generally accepted that these early text-to-model platforms still need a little refinement. So, which is the best AI for 3D modeling? And just how complex a model can you generate? To find out, 3D Mag spoke to the emerging industry’s innovators, who each offered surprisingly different takes on where the technology will head in the near future.

AI for 3D modeling: How does it work?

Generating a 3D model based on a text prompt is incredibly complicated. It involves multiple AI models working together to interpret inputs and generate 3D representations.

Essentially, this means using Natural Language Processing (NLP) to gain an understanding of the shape, texture, and spatial relationships of a desired model. Some platforms then create a 2D image based on this prompt using popular tools like OpenAI’s DALL-E.

Through numerous AI-powered methods, including Generative Adversarial Networks (GANs), diffusion models, and Gaussian splatting, these inputs are used to predict a rough 3D shape. Where the process produces a mesh, that mesh can then be refined, textured, and optimized for real-world use automatically, without direct user intervention.
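To make the “optimized” step a little more concrete, here is a minimal Python sketch of one common mesh clean-up operation, vertex welding: merging vertices that sit within a small tolerance of each other so a generated mesh doesn’t carry duplicate points or collapsed triangles. It assumes a simple mesh representation (a list of (x, y, z) vertices plus triangles stored as index triples); `weld_vertices` and its default tolerance are hypothetical, illustrative choices, not how any platform mentioned here necessarily does it.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def weld_vertices(
    vertices: List[Vertex],
    triangles: List[Triangle],
    tolerance: float = 1e-4,
) -> Tuple[List[Vertex], List[Triangle]]:
    """Merge vertices that snap to the same grid cell of size `tolerance`.

    Hypothetical helper for illustration. Grid snapping is an approximation:
    two points closer than `tolerance` can still fall into neighbouring cells
    and remain separate.
    """
    cell_to_new: Dict[Tuple[int, int, int], int] = {}
    remap: List[int] = []  # old vertex index -> new vertex index
    welded: List[Vertex] = []
    for x, y, z in vertices:
        key = (round(x / tolerance), round(y / tolerance), round(z / tolerance))
        if key not in cell_to_new:
            cell_to_new[key] = len(welded)
            welded.append((x, y, z))  # keep the first vertex seen in this cell
        remap.append(cell_to_new[key])

    new_tris: List[Triangle] = []
    for a, b, c in triangles:
        ta, tb, tc = remap[a], remap[b], remap[c]
        # Drop triangles that collapsed to a line or a point after welding.
        if ta != tb and tb != tc and ta != tc:
            new_tris.append((ta, tb, tc))
    return welded, new_tris


if __name__ == "__main__":
    # Two triangles forming a square, each with its own copy of the shared edge.
    verts = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 0, 0), (1, 1, 0), (0, 1, 0)]
    welded, tris = weld_vertices(verts, [(0, 1, 2), (3, 4, 5)])
    print(len(welded))  # 4
    print(tris)  # [(0, 1, 2), (0, 2, 3)]
```

Grid snapping keeps the sketch short and linear-time; a production tool would more likely use a spatial index so that near neighbours across cell boundaries are also merged.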

The potential benefits of automating 3D modeling are clear. Even generating a mesh for further iteration can save designers hours of work. Depending on their field, this could mean bringing 3D assets to screen faster, or getting a physical product design finalized at pace.

Where are AI-generated 3D models used?

While heavy-hitters like Google and NVIDIA are developing their own text-to-model software (DreamFusion and GET3D, respectively), we’ve opted to find out how such platforms are being used by speaking to those who have already gone to market.

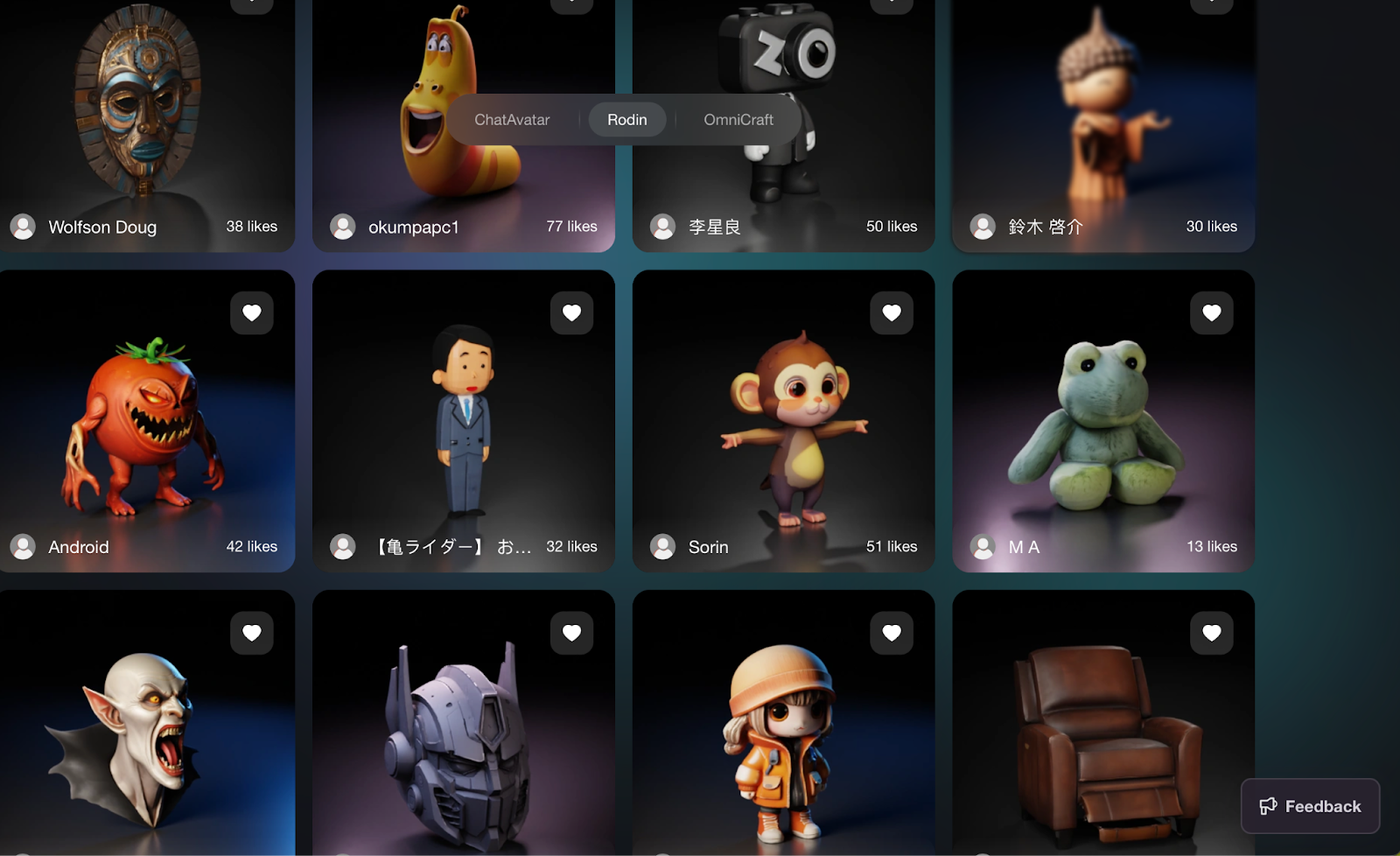

Alpha3D, for example, boasts that its platform already makes it possible to generate models for AR/VR, e-commerce, CGI, video games, 3D printing, and more. Hyper3D makes similar claims about its Rodin algorithm, and its website is full of AI 3D models to support this.

However, Jacob Si, Hyper3D’s growth manager, admits there are certain areas its 3D assets can’t yet serve.

There are quite a lot of interesting ways you can use these models. Let’s say you’re an e-commerce seller. You could roll out an AR initiative where buyers ‘place furniture in their homes’ through a camera. But if we’re talking about making a specific component with fine details for manufacturing, I don’t think that’s where generative AI will shine.

Jacob Si

This view is echoed at 3D Foundry Labs, Eliran Dahan and Tal Kenig’s successor firm to the popular AI 3D model generator 3DFY.ai. While their initial platform captured the imagination of makers and designers, they’ve since changed course, launching a service aimed at the needs of more industrial clients that draws on whichever technologies suit each project.

“One of the most common responses from [3DFY] clients was ‘it’s nice, but I can’t really use it in my specific use case.’ We came to the conclusion that the technology isn’t mature enough,” said Dahan. “We now have a multidisciplinary team that evaluates existing technologies to come up with a solution. It’s totally different to what we’ve done before, we constantly have to be at the forefront, staying up-to-date with all related products and research papers.”

“We realized that one size doesn’t fit all. In fact, one size fits almost none.”

Eliran Dahan

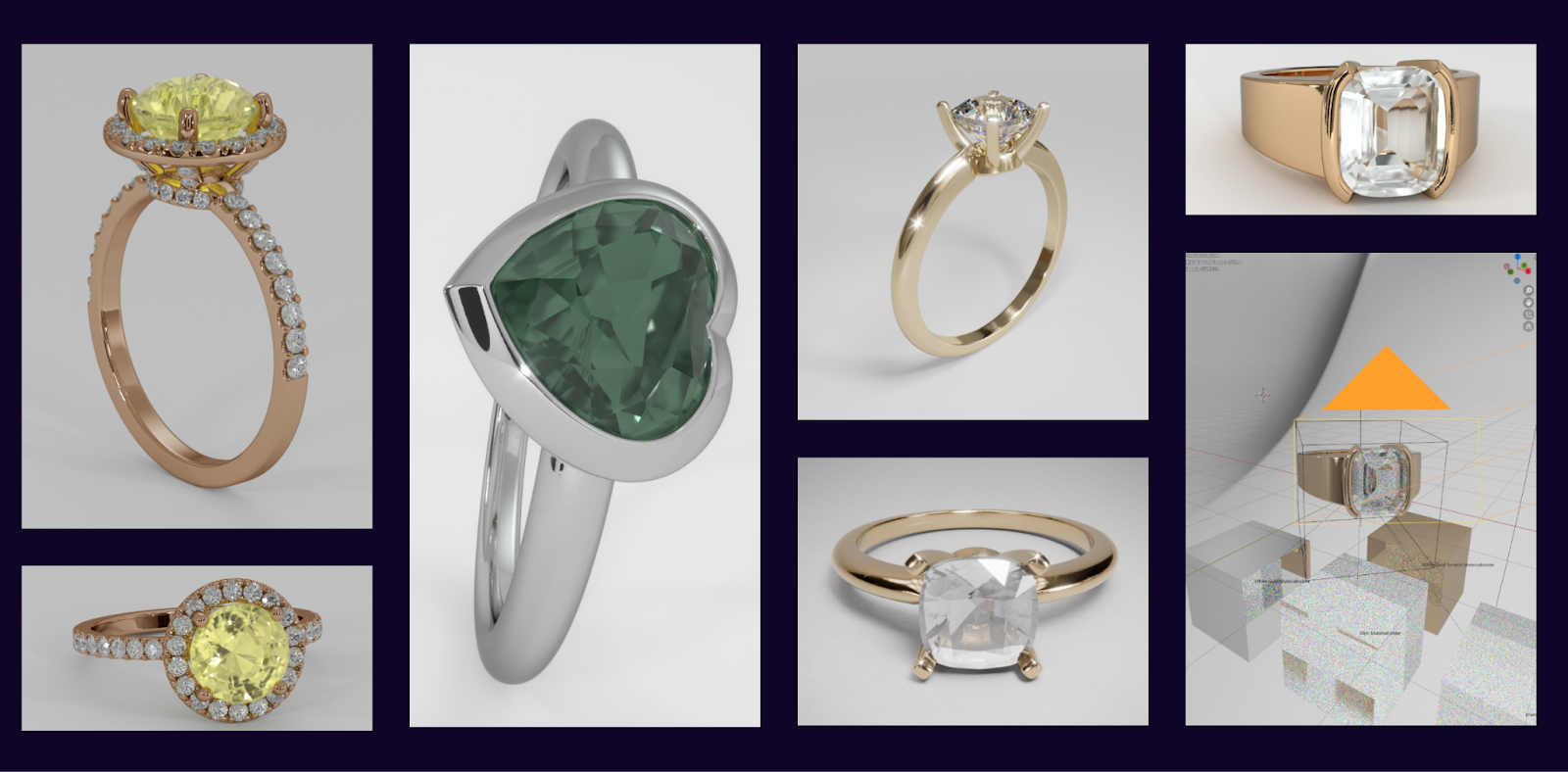

3D Foundry Labs’ fresh approach has broadened its horizons: it now works with customers to accelerate everything from graphics rendering pipelines to e-commerce modeling. For example, one of its clients in the jewelry industry previously created 3D models by hand so customers could preview their orders. With AI, this hours-long process now takes minutes.

How detailed are 3D models generated by AI?

The AI-generated models already available online speak for themselves. Current technologies allow for the rapid, automatic generation of some pretty realistic-looking AI 3D models. Of course, many are inspired by real-world items (and copyright is perhaps a discussion for another day), but AI seems to have no problem at all with authentic shape and texture reconstruction.

Where results fall short is geometric accuracy, but Alpha3D’s COO and co-founder, Rait-Eino Laarman, says the models still provide a solid basis for further modeling.

For 3D printing or reverse engineering, our models provide a solid starting point that can be refined manually using specialized software to meet precise requirements. While they offer good foundations, additional manual edits are typically necessary to ensure the detailed accuracy needed for physical manufacturing or engineering purposes.

Rait-Eino Laarman

By contrast, Si admits that accuracy can be an issue, especially compared to photogrammetry or 3D scan-based models, where users “have the luxury of capturing from 360 degrees.” Because his platform “leaves this to AI’s imagination,” reconstruction occasionally suffers. On geometry capture, professional 3D scanning trumps both AI and photogrammetry, but we’ll go into that later.

With regard to detail capture, Dahan and Kenig go even further in their criticism of AI-generated models, describing them as “not super-detailed” and having “very few real-life use cases.”

“First of all, they’re not really an accurate representation,” claimed Kenig. “They’re not very detailed, and the same goes for textures; only a few models can achieve realistic PBR textures. If a technical artist makes a 3D model, they also divide it into parts. Nowadays, AI models are single-piece meshes, some of which can be broken down, but not into meaningful parts.”
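Kenig’s point about parts is easy to see in code. The simplest automatic way to “break down” a single mesh is to split it into connected components, i.e. groups of triangles linked by shared vertices. The minimal Python sketch below does this with a union-find structure, assuming a mesh stored as triangles that index into a shared vertex list; `split_components` is a hypothetical helper. It only separates pieces that are already physically disconnected and has no notion of which pieces are meaningful parts, such as a chair’s legs versus its seat.

```python
from typing import Dict, List, Tuple

Triangle = Tuple[int, int, int]


def split_components(triangles: List[Triangle]) -> List[List[Triangle]]:
    """Group triangles into connected components via shared vertex indices.

    Hypothetical helper: a purely geometric split with no semantic knowledge.
    """
    parent: Dict[int, int] = {}

    def find(v: int) -> int:
        parent.setdefault(v, v)
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving keeps trees shallow
            v = parent[v]
        return v

    def union(a: int, b: int) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Every triangle ties its three vertices into one set.
    for a, b, c in triangles:
        union(a, b)
        union(b, c)

    # Bucket triangles by the root of their first vertex.
    groups: Dict[int, List[Triangle]] = {}
    for tri in triangles:
        groups.setdefault(find(tri[0]), []).append(tri)
    return list(groups.values())


if __name__ == "__main__":
    # Two triangles sharing an edge, plus one separate triangle.
    parts = split_components([(0, 1, 2), (2, 1, 3), (4, 5, 6)])
    print(len(parts))  # 2
    print(parts)  # [[(0, 1, 2), (2, 1, 3)], [(4, 5, 6)]]
```

A single-piece generated mesh, where everything is welded together, comes back as one component. Recovering the meaningful parts a technical artist would create requires semantic segmentation rather than topology alone.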

What’s the future of AI-powered 3D model generation?

Interestingly, each of our interviewees had slightly different takes on where AI will be used in 3D modeling next. Of course, professional 3D scanners like the Artec Leo and Eva already harness AI to capture fine surface details in high definition, but our interviewees see plenty of room for further development.

While Laarman sees his platform evolving into an “AI-powered hub for 3D creation” that could expand into areas like manufacturing, Si says Hyper3D is targeting 3D printing and working with Bambu Lab to create accessible AI 3D models on MakerWorld.

Kenig, on the other hand, believes that existing LLMs will one day be deployed to accelerate the functions ordinarily carried out by technicians during design, including sketching and texturing.

There are plugins for Blender and maybe Unity, where you can tell LLMs to create like 100 different spheres, stuff like that. Maybe the next-gen will see someone really fine-tune this – perhaps taking a library of Python scripts to Blender (which has a great API). But I think progress will come from AI learning to operate these tools in an advanced manner.

Tal Kenig
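For a sense of what Kenig means, the kind of Blender Python script an LLM plugin might emit for “create 100 spheres” can be as short as the sketch below. It assumes Blender’s bundled `bpy` module and its `bpy.ops.mesh.primitive_uv_sphere_add` operator. The helper names (`grid_locations`, `add_spheres`) and the grid layout are hypothetical choices, not taken from any particular plugin. Outside Blender, where `bpy` can’t be imported, it simply returns the planned sphere positions.

```python
import math
from typing import List, Tuple

try:
    import bpy  # only importable inside Blender's bundled Python
except ImportError:
    bpy = None

Location = Tuple[float, float, float]


def grid_locations(count: int, spacing: float = 2.5) -> List[Location]:
    """Lay out `count` positions on a square grid in the XY plane (hypothetical helper)."""
    side = math.ceil(math.sqrt(count))
    return [((i % side) * spacing, (i // side) * spacing, 0.0) for i in range(count)]


def add_spheres(count: int = 100, radius: float = 1.0) -> List[Location]:
    """Add `count` UV spheres to the open Blender scene when running inside Blender."""
    # Spacing of 2.5 radii leaves a small gap between neighbouring spheres.
    locations = grid_locations(count, spacing=radius * 2.5)
    if bpy is not None:
        for loc in locations:
            bpy.ops.mesh.primitive_uv_sphere_add(radius=radius, location=loc)
    return locations


if __name__ == "__main__":
    placed = add_spheres(100)
    print(len(placed))  # 100
```

Pasted into Blender’s Text Editor and run, this adds the spheres to the open scene. Scripts this simple are easy to generate; the harder problem Kenig describes is AI operating such tools to produce detailed, production-ready work.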

Whether or not these predictions come true, only time will tell. For now, AI-generated 3D models appear relatively limited in scope, especially when it comes to reverse engineering. Generally, photogrammetry delivers greater visual detail, and 3D scanning is head and shoulders above both for precise data capture, reaching accuracies of just a few microns.

With bleeding-edge technologies like AI photogrammetry, AI isn’t entirely absent from other 3D modeling techniques either. Still, it’ll be exciting to see how text-to-model platforms progress in the near future, as the hype around AI continues to swirl in every direction.