Update 7 June 2017

itSeez3D just released version 1.0 of their Avatar Web API and a beta plugin for Unity.

A while ago I wrote that itSeez3D announced a new Software Development Kit (SDK) — called Game Avatar — that would allow users to make a 3D scan of their face by taking just a single, regular photo with the front-facing camera of their smartphone.

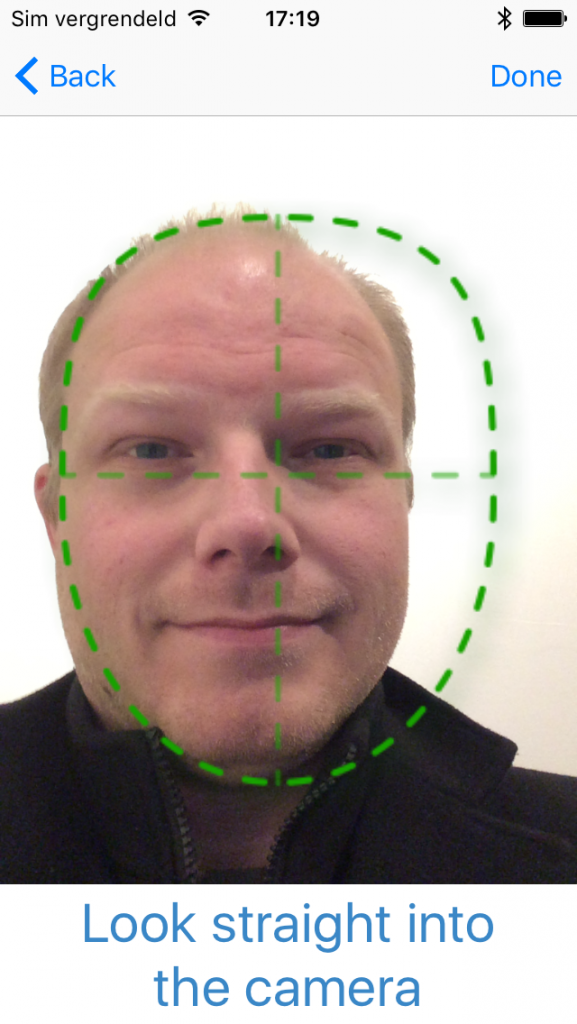

Recently, they released a sample app for iOS that shows how this technology works. Naturally, I had to test it.

The Game Avatar app is very basic, but that shows just how easy it is. Just open the app and take a selfie, wait a minute while it’s processing and… bam… you’re 3D.

In this demo, it allows you to change your hairstyle. This is actually a necessity, because it processes just your face — not your actual hair because it can’t see(z) that.

How does it work?

As you might know, it’s impossible to capture actual 3D data with just a single photograph, because a photo simply doesn’t contain any depth information. That sets it apart from a smartphone with a depth sensor, like the recent Tango phones.

So this technology uses AI algorithms to estimate how you look in 3D, based on your photo. This will probably never be as accurate as 3D scanning or photogrammetry. But it’s very fast, very easy and might be enough for the intended purpose of quickly creating a digital representation of yourself (an avatar) for use in (mobile) 3D games and augmented and virtual reality applications.

The calculations are done in the cloud, which makes it very fast. And because of the small file sizes of a single photo and a relatively low-poly model, upload and download are fast as well. From photo to 3D model, it took about a minute.

What can I do with it?

The idea is that developers can use the Game Avatar SDK (apply for beta here) to integrate this technology into their own apps and games. But the demo app is useful as well, because it not only saves all captures but also lets you export the 3D model, including the chosen hairstyle.

The format is a .PLY 3D model and a .PNG texture file packed in a .ZIP container. Uploading the .ZIP directly to Sketchfab somehow messed up the texture. But as you can see below, that shows perfectly where the actual photograph ends and the generated texture begins:
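As an aside for anyone who wants to check what an exported .PLY contains before uploading it: every PLY file starts with a plain-text header that lists its elements and properties. The sketch below reads that header using only the Python standard library. The function name `read_ply_header` and the sample data are my own illustration, not something taken from the Game Avatar export, whose exact header I haven't documented here.

```python
import io


def read_ply_header(stream):
    """Parse the text header of a PLY file opened in binary mode.

    Returns a dict with the declared format, the elements (with their
    counts and property names) and any comment lines.
    """
    if stream.readline().strip() != b"ply":
        raise ValueError("not a PLY file")
    info = {"format": None, "elements": {}, "comments": []}
    current = None
    for raw in stream:
        line = raw.decode("ascii", errors="replace").strip()
        if line == "end_header":
            return info
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "format":
            info["format"] = parts[1]
        elif parts[0] == "comment":
            info["comments"].append(" ".join(parts[1:]))
        elif parts[0] == "element":
            current = parts[1]
            info["elements"][current] = {"count": int(parts[2]), "properties": []}
        elif parts[0] == "property" and current is not None:
            # The property name is always the last token on the line.
            info["elements"][current]["properties"].append(parts[-1])
    raise ValueError("missing end_header")


# Hypothetical sample: a single triangle, not a real Game Avatar export.
sample = io.BytesIO(
    b"ply\nformat ascii 1.0\ncomment hypothetical sample\n"
    b"element vertex 3\nproperty float x\nproperty float y\nproperty float z\n"
    b"element face 1\nproperty list uchar int vertex_indices\nend_header\n"
    b"0 0 0\n1 0 0\n0 1 0\n3 0 1 2\n"
)
header = read_ply_header(sample)
print(header["format"], header["elements"]["vertex"]["count"])
```

For a real file you would pass `open("model.ply", "rb")` instead of the in-memory sample. The comment lines and property names can hint at whether and how the file refers to its texture.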

I fixed the texture issue by opening the .PLY in the free MeshLab program, exporting it as .OBJ, zipping the .OBJ, .MTL and .PNG and re-uploading that to Sketchfab:
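If you have to do this for more than a couple of scans, the zipping step is easy to script. Below is a minimal sketch using Python's standard `zipfile` module. It assumes you have already exported the .OBJ and .MTL from MeshLab, and the file names are hypothetical. It puts all files at the top level of the archive, which mirrors what I did by hand.

```python
import zipfile
from pathlib import Path


def bundle_for_upload(files, archive_path):
    """Write the given files into a flat ZIP archive.

    Each file is stored under its bare file name (no folders).
    Returns the list of member names in the finished archive.
    """
    archive_path = Path(archive_path)
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for f in files:
            f = Path(f)
            if not f.is_file():
                raise FileNotFoundError(f)
            zf.write(f, arcname=f.name)
    with zipfile.ZipFile(archive_path) as zf:
        return zf.namelist()


# Example call (hypothetical file names):
# bundle_for_upload(["avatar.obj", "avatar.mtl", "avatar.png"], "avatar.zip")
```

The function raises an error if one of the files is missing, so you notice a forgotten texture before uploading rather than after.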

The result is uncanny. It’s clearly my face, but pasted on top of a bald guy’s head. The added texture is clearly from an actual person: it even has some blood on the top from a scratch or shaving accident! I guess it chooses this texture based on skin tone. The only other person I tested so far was my business partner Patrick, who’s also a Caucasian male, and he got the same texture:

But as you can see, they’re not the same 3D model. If you put both embeds above in MatCap render mode (it’s under the gear icon) you can see that the AI estimated that the shapes of my head and nose are different from Patrick’s. Talking about noses: it’s good to take the picture from a slightly low angle so your nostrils are visible. Otherwise you won’t have any, like Patrick.

So it’s fast and fun, and I could finally see what I will look like totally bald (preparing me for the future…) or make myself look a bit more like Ed Sheeran:

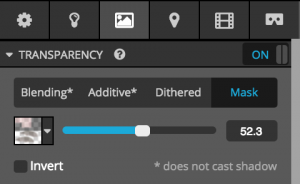

Quick Tip: If you want the artificial hair to display correctly on Sketchfab, go to Materials > Transparency. Select Mask and then choose the only texture available and use the slider to set the level of transparency to your liking.

A nice detail: The export is done through email and also includes an animated GIF. Nice for Twitter and Tumblr! However, one of the GIFs I got was just over 5MB, which is over the file size limit of those social networks. I reduced the resolution and number of colors in Photoshop to bring it down to just 918KB:

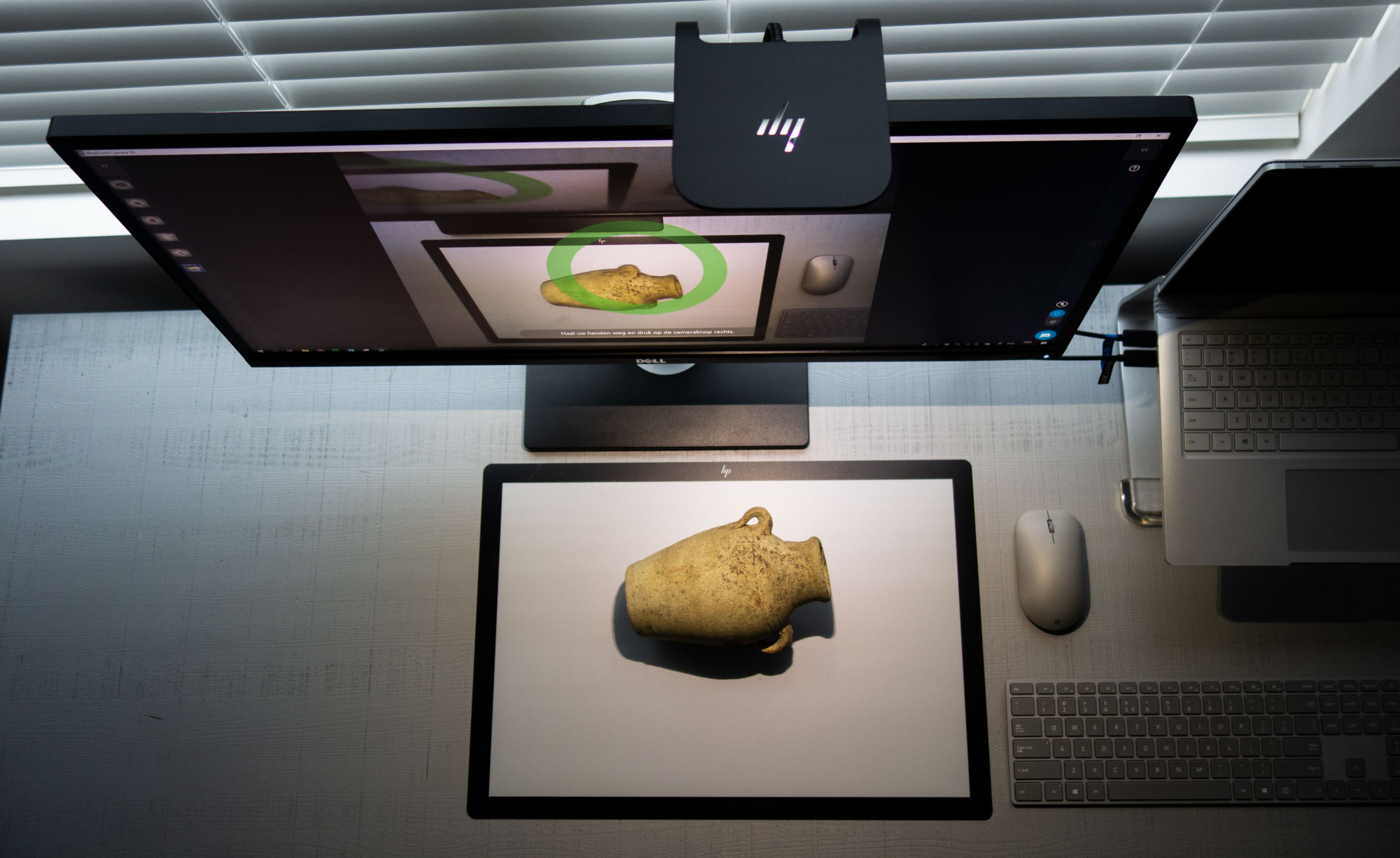

How good is it compared to a “real” 3D Scan?

That’s a tricky question, because it depends on what you compare it to. So I chose to simply compare it to a scan made with the itSeez3D iPad app for Structure Sensor.

As you can see, the algorithm estimated the shape of my mouth, nose and eyes rather well. Even the ears somewhat resemble mine. From the front it certainly looks like me. Of course, the fact that I don’t have much hair helps a lot in this case.

In Patrick’s case, the resemblance is almost non-existent:

Sure, his hair makes a lot of difference and he has shaved since the Structure Sensor scans, but the shapes of his eye sockets and ears are off.

This really shows how the results depend on the subject, and how hard the human brain is to fool when it comes to recognizing people. Of course, if you put these heads in a space suit to feature them in a game, you probably won’t notice.

I’m definitely going to test this on non-caucasian-male subjects and will update this post accordingly. But for now I must say I’m impressed by how fast and fun this way of 3D capture is. The threshold doesn’t get any lower than this (consumers can just use the phone they have) and AI algorithms like this will get better over time.

This might just make 3D scanning mainstream!
