A while ago I wrote a post stating that The Future of Mobile 3D Scanning is Software, but now is a good time to soften that statement a bit. It’s no secret that the TrueDepth Camera on the iPhone X is not only handy for logging into the phone using Face ID; it can also be used to make 3D scans. Bellus3D and other apps have demonstrated that this works very well, and Apple is expected to put these capabilities in all upcoming 2018 iPhone models.

There have also been rumors on the Android front, but yesterday The Verge reported that smartphone maker Vivo has revealed actual plans to put front-facing depth sensors in its phones. The sensor is said to offer a higher resolution of 300,000 sensor points, 10 times the resolution of the current iPhone X sensor. The resolution might not matter to people who just want to use it for facial recognition, but it does matter for broader 3D scanning purposes.

Vivo will use Time of Flight (TOF) sensors for this feature, and although The Verge puts that technology under the umbrella of Structured Light Scanning (SLS), they are two totally different approaches to active 3D capture, or 3D scanning. It doesn’t matter that much for consumers, but if you’re curious you should really read this post on Gamasutra that explains the difference by comparing the depth sensors of the Kinect 1.0 and the Kinect 2.0, which use SLS and TOF respectively.

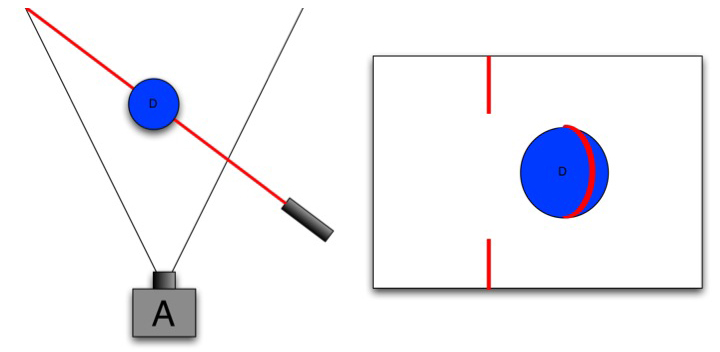

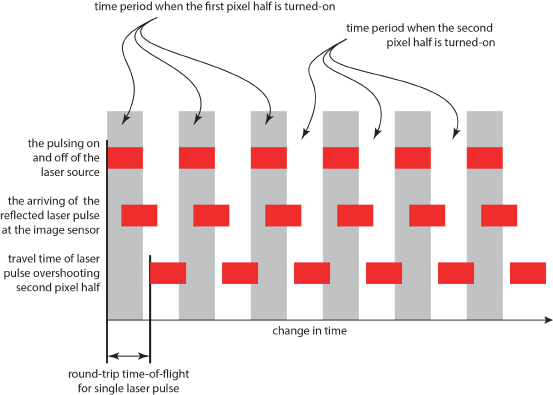

Structured Light Scanning

Time of Flight
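To make the difference a bit more concrete, here is a minimal sketch of the textbook depth formulas behind both approaches. It is not how the Kinect or any phone sensor is actually implemented: the function names and the example numbers (focal length, baseline, disparity) are made up for illustration. Structured light recovers depth by triangulation, from how far a projected pattern appears shifted in the camera image, while time of flight measures how long light takes to travel to the surface and back.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def depth_from_structured_light(focal_length_px: float,
                                baseline_m: float,
                                disparity_px: float) -> float:
    """Triangulation: depth = focal length * baseline / pattern shift.

    baseline_m is the distance between projector and camera;
    disparity_px is the observed shift of the projected pattern.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_from_time_of_flight(round_trip_s: float) -> float:
    """Timing: light travels to the surface and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to give a readable result.
    print(depth_from_structured_light(580.0, 0.075, 29.0))  # metres
    print(depth_from_time_of_flight(10e-9))                 # metres
```

Note that the triangulation result depends on the geometry of the device (the baseline), while the time-of-flight result depends only on timing.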

I’m not sure which manufacturer will supply the TOF sensors to Vivo (or other Android phone makers), but I do know that PMD Technologies supplied their sensors for the Google Tango phones. Those were phones that, in contrast to current front-facing implementations, used back-facing sensors for AR tracking and mapping purposes. That project failed because it was surpassed by the software-only ARCore for Android (similar to ARKit for iOS). That is not to say that rear-facing sensors will never return, since Apple is rumored to embed them into iPhones in 2019.

Vivo claims their TOF sensor can perform 3D mapping up to 3 meters, which seems like overkill for a front-facing sensor. So I totally expect these sensors to be embedded in the back of Android phones as well at some point in the future. That would be an awesome revolution for the democratization of 3D scanning!
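For a sense of what a 3-meter range means for a TOF sensor, here is a rough back-of-the-envelope sketch. It assumes an idealized sensor and says nothing about how Vivo's hardware actually works; the function names are hypothetical. The second function assumes a continuous-wave design, where the sensor measures the phase shift of modulated light and the measurable range wraps around at half the modulation wavelength.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_time_s(distance_m: float) -> float:
    """Time for light to reach a surface at distance_m and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def unambiguous_range_m(modulation_hz: float) -> float:
    """Maximum distance before a continuous-wave phase measurement wraps."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_hz)


if __name__ == "__main__":
    # Round trip for the claimed 3 m range, in nanoseconds.
    print(round_trip_time_s(3.0) * 1e9)
    # Range covered by a hypothetical 50 MHz modulation frequency, in metres.
    print(unambiguous_range_m(50e6))
```

The round trip for 3 meters comes out at roughly 20 nanoseconds, which shows how fine the timing has to be for this kind of sensor.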

Getting back to Vivo’s front-facing sensor plans, they haven’t given any kind of release schedule for this feature. But 3D scanning will reach the masses in two to three years no matter what and that makes yours truly very happy.

So the future of mobile 3D capture might contain active scanning hardware after all. Either way a lot of technology will be released that needs to be reviewed thoroughly — I’m ready!

If you want to be updated on future developments of mobile 3D scanning, please follow me on Facebook or LinkedIn.

Hello! I found your post very informative. Do you know if we can access the TOF sensor data? I’m considering using a smartphone with TOF, but I’m not sure if they keep the sensor data for their own applications or if the sensor values are accessible for developers.

Hi Anna, do you have any news regarding TOF data?

Thanks a lot for this awesome content. I have read it thoroughly and learned a lot from this. Hope to see more content like this soon.
