It has been a while since I covered depth sensors for 3D scanning because there haven’t been interesting new ones for a while. When people ask what sensor to buy to take their first steps into the world of 3D scanning, I usually advise a Structure Sensor (Review) for iPad owners and a 3D Systems Sense 2 (Review), which features an Intel RealSense SR300 sensor, for people who want to use a Windows 10 laptop or tablet.

I always stress that the sensor itself is just 50% of the scanning experience. The software is the other half. The Sense 2 comes with its own well-designed Sense for RealSense software, and for the Structure Sensor I advise either itSeez3D or Skanect, depending on the use case.

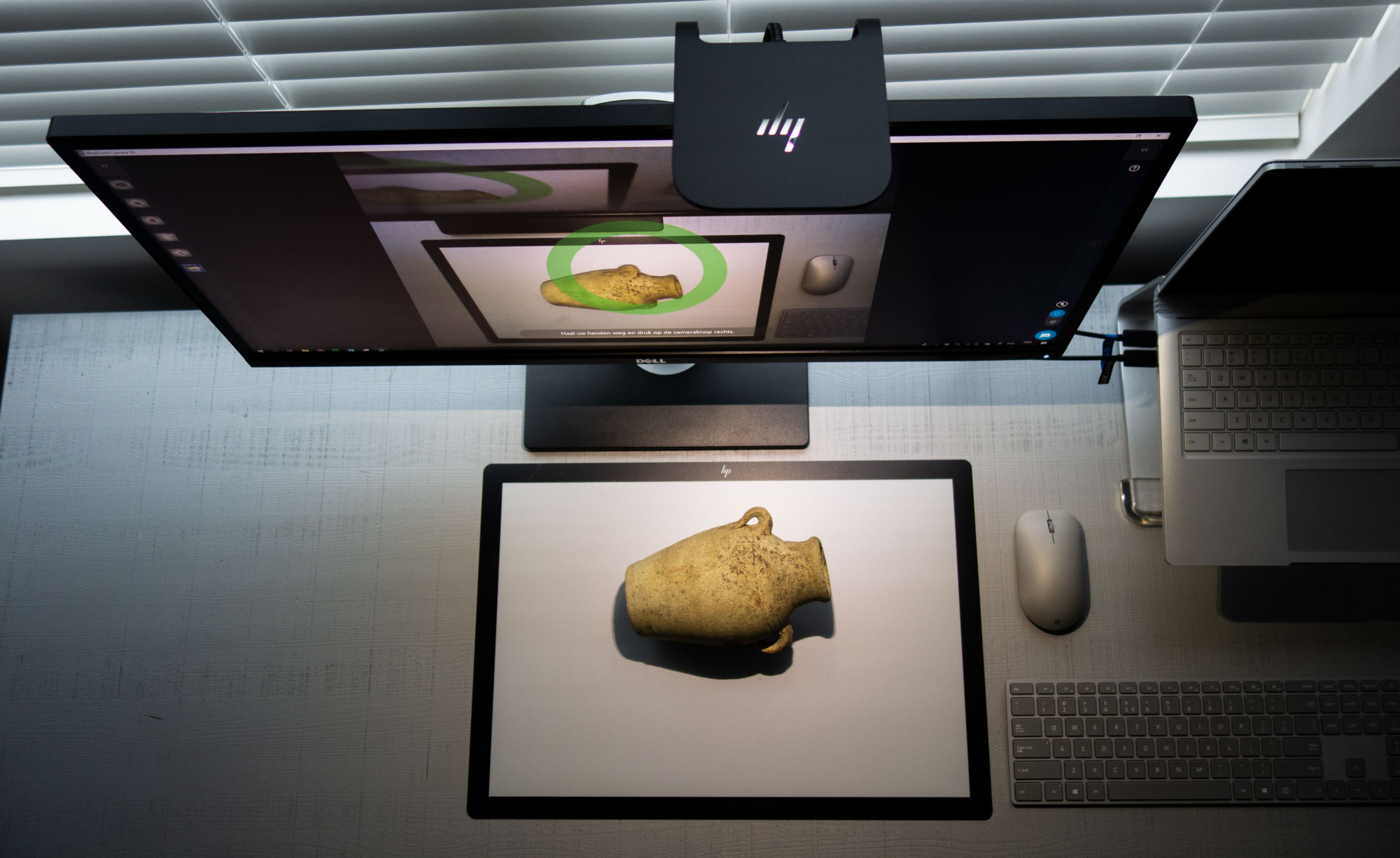

But the SR300 has been replaced by Intel’s latest RealSense D400 series of depth sensors and I’m getting a lot of questions about it in relation to 3D scanning. Also, I see a big rise in popularity of the Astra line-up from Orbbec, which is also HP’s sensor supplier of choice for the Sprout Pro G2 all-in-one computer (Review) and recently introduced HP Z Camera (which I’m currently beta-testing and will review soon). And of course, a lot of people are Googling for Microsoft Kinect alternatives and these sensors are all contenders for that.

Hardware

So for this review I’ve tested both the $172.55 Intel RealSense D415 and $159.99 Orbbec Astra S sensors to see how they perform for 3D scanning small to medium objects. And as a benchmark, I’ve put these sensors up against the RealSense SR300, which I have been using for almost two years now, both in the form of the 3D Systems Sense 2 and the more bare-bones (dev-kit) version in the form of the $149.99 Creative BlasterX Senz3D.

There are a few things that are noteworthy before I continue:

- The SR300 and Astra S are both short-range sensors (hence the model names). The D415 has a longer range, but its range starts very close to the sensor, which makes it better suited for object scanning than long-range sensors like the RealSense R200 and the Orbbec Astra (non-S), which have a minimum scanning distance of 0.5m and 0.6m respectively.

- There’s also a RealSense D435 model which has a larger field of view due to wide-angle lenses and a global shutter system for all cameras. Both features have benefits for object or human tracking use-cases that either have to cover a large area or have fast-moving subjects, but there are no benefits for (slowly) scanning small to medium objects or people.

- The Astra S’ higher resolution modes only work at very slow frame rates, making them less suitable for handheld scanning (turntable scanning does work). More about that later.

- The Astra S is a lot larger than the other sensors, putting the projector and IR-sensor further apart. This can make it harder to scan hard-to-reach areas that are obstructed by other parts of an object.

Finally, it’s good to know that all three sensors have standard tripod threads, but the SR300 is impossible to mount on the plates of most large tripods because the thread isn’t located at the lowest point of the device. A reader noted in the comments that even though my Astra S does have a standard 1/4″-20TPI tripod thread, recent models have an M6x7.2mm thread that might not fit the tripods you have (mine all have 1/4″ screws). The RealSense models all have a single 1/4″-20 tripod thread. On top of that, the D400-series sensors have two M3 threads on the back.

Software

To test these devices properly I needed software that supported all of them. The only commercial software that I know of that does so is RecFusion. I’m using the $99 single-sensor version for this review but it’s good to know that there’s also a $499 multi-sensor version. With that, you can create a calibrated rig with multiple sensors around a subject so you can minimize or eliminate movement and increase scanning speed.

I’ll do a full review of RecFusion soon in which I will also cover its editing features. But in short, the big benefit of the software is that it supports so many sensors and lets you choose all available depth and color resolutions independently (although not all resolutions or combinations of resolutions seem to work).

Note about Texture Quality

A downside of RecFusion that greatly impacts this review is how it captures and handles color data. Unlike the recent version of Skanect that has a mode that can capture color frames independently of depth data and map these images as textures onto the geometry later, RecFusion strictly uses per-vertex coloring internally. It can export a UV texture map in some file formats but that will be generated from the per-vertex colors, not from external image files. This means texture quality isn’t on par with other software in my opinion, so I’m forced to mainly focus on geometric scan quality for this review.

Test Setup

Although all sensors can be used hand-held, I’ve opted to mount them on a Manfrotto Action Tripod and use a simple IKEA SNUDDA lazy susan. This way I can make sure that the scan results are not influenced by human variables such as hand motion. Because I’ve scanned from a single static angle, this means that the resulting models will contain holes. However, the goal of this test is not to make complete scans but rather look at the relative results between sensors.

As you can see I’m using two softboxes with continuous studio lights, but that’s strictly for better texture quality (which isn’t RecFusion’s strong point). Depth capture is not influenced by artificial lights at all; depth sensors can even “see” depth in complete darkness. As a side note: the SR300 and Astra S don’t like sunlight from nearby windows, while the D415 is designed to also function outdoors. I haven’t tested that yet, though.

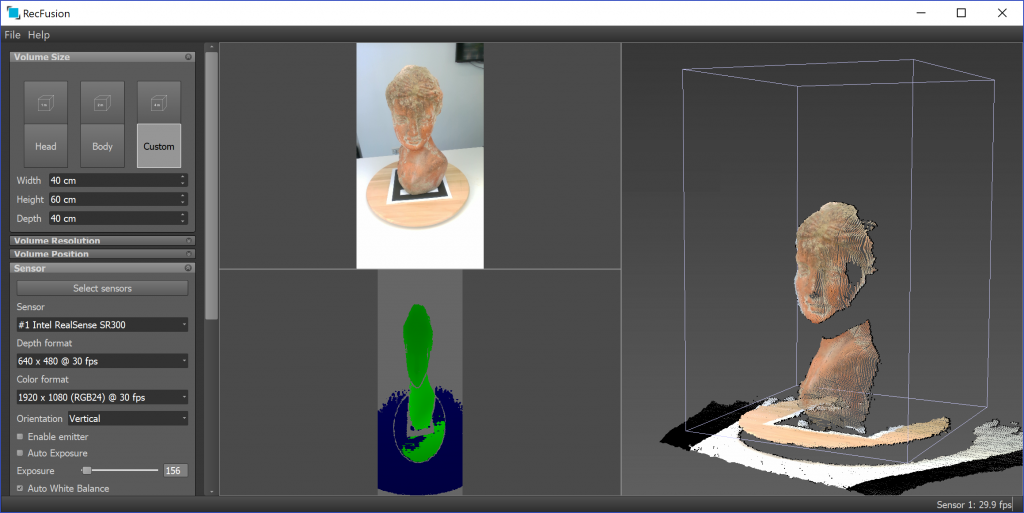

It is nice that RecFusion lets you set the sensor orientation to vertical. For tall objects, this is often preferable because the sensor’s image is rectangular. Here is what the color and depth feeds look like, and how that translates to a real-time 3D point cloud:

The default settings for the SR300 (above is a still frame) are pretty good. And because the range stops at 2 meters I had no problems with the back wall of my office. The depth image might be just VGA resolution but it’s very stable and almost without noise. This is a different story with the D415’s depth map (below is an animated GIF), which has a lot of moving noise regardless of the resolution:

In my tests, I found that the severe noise impacted both the tracking during scanning and the scan quality. It did help to set the depth cutoff to 1 meter (since the sensor can sense up to 10 meters), even though the 3D image is only formed within the bounding volume.
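To make the depth cutoff concrete, here is a minimal sketch of what such a setting does to a single depth frame. It is not RecFusion’s code; the function name is hypothetical, and I’m assuming depth values in millimeters with 0 meaning “no reading”.

```python
def apply_depth_cutoff(depth_mm, cutoff_mm=1000):
    """Return a copy of the frame with readings beyond the cutoff marked invalid.

    depth_mm is a list of rows of depth readings in millimeters.
    Assumption: 0 means "no reading", so rejected pixels are set to 0.
    """
    return [
        [d if 0 < d <= cutoff_mm else 0 for d in row]
        for row in depth_mm
    ]


# A tiny made-up 2x3 frame: the object sits under 1 m, the back wall is far away.
frame = [
    [450, 980, 3200],
    [0, 1000, 9800],
]
print(apply_depth_cutoff(frame))
```

Everything past the cutoff, noisy or not, is thrown away before the software tries to track against it.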

Note about D400 Results

After publishing this post, the CTO of Intel RealSense commented with tips on how to improve the D400 series noise levels and scanning results. I’ll dive into this and update the post accordingly if results indeed improve.

With the Astra S, RecFusion displayed the high res color and depth modes as 30fps while the specs state 5-7fps.

But if you look closely at the bottom-right corner you can see the actual combined framerate drop to 3fps with the highest depth resolution selected. That’s very low even for turntable scanning. That might be a limitation of the Orbbec sensor’s USB 2.0 connection vs. USB 3.0 on the RealSense devices. Somehow the highest color resolution streamed only colored static.
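A quick back-of-the-envelope check suggests the USB 2.0 explanation is at least plausible. The sketch below assumes uncompressed depth at 2 bytes per pixel and uses the theoretical 480 Mbit/s USB 2.0 signaling rate; real-world throughput is lower and the color stream comes on top, so treat the numbers as an upper bound rather than a measurement.

```python
# Theoretical USB 2.0 High Speed rate: 480 Mbit/s = 60 MB/s.
USB2_BYTES_PER_S = 480_000_000 / 8


def stream_bytes_per_s(width, height, fps, bytes_per_pixel=2):
    """Raw data rate of an uncompressed stream (assumption: 16-bit depth pixels)."""
    return width * height * bytes_per_pixel * fps


def max_fps(width, height, bytes_per_pixel=2, link_bytes_per_s=USB2_BYTES_PER_S):
    """Highest frame rate the link could carry if it were used at 100%."""
    return link_bytes_per_s / (width * height * bytes_per_pixel)


needed = stream_bytes_per_s(1280, 1024, 30)
print(f"1280x1024 depth at 30fps needs {needed / 1e6:.1f} MB/s")
print(f"USB 2.0 could carry at most {max_fps(1280, 1024):.1f} fps of it")
```

Under these assumptions the 30fps mode needs about 78.6 MB/s, more than USB 2.0 offers even on paper, and the ceiling for depth alone is about 22.9 fps.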

Results

But now let’s look at some results! What you see in the Sketchfab embed below are (from left to right): the SR300 result with the 640×480 depth resolution; the D415 result in both 640×480 and 1280×720 resolution; and the Astra S result in 640×480 and 1280×1024 resolution.

Note: All models were captured with RecFusion’s Volume Resolution set to 512×512, which translates to a “voxel size” of 2mm, meaning it won’t capture details smaller than that. Theoretically these sensors have a higher depth resolution than that at close range (the resolution is distance-dependent). For instance, Intel states that the D400 series’ depth error is less than 1% of the distance, meaning ~1cm at 1m but ~2mm at 20cm. But setting any higher resolution proved too much for my Surface Book with GTX965 and 2GB of VRAM. RecFusion simply gave an error that it wouldn’t go any finer, at least in real-time*.

*Note about Real-Time & Offline Reconstruction

After publishing this post I continued working on my full review of RecFusion (out soon!) and discovered on the website that it also supports offline reconstruction on the CPU besides real-time reconstruction on the GPU. However, I’ve not been able to find the Compute Unit panel described on the tutorial page that should let you choose CPU or GPU mode. I’ve contacted the developer for tips on this and will update the post accordingly. I’m specifically curious about the improvement in color resolution when I max out the voxel resolution.
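To put the voxel size and the distance-dependent sensor error from the note above side by side, here is a small worked calculation. It takes the “less than 1% of the distance” figure at face value and assumes the volume has 512 voxels along each axis; the function names are mine.

```python
def volume_side_mm(voxels_per_axis, voxel_size_mm):
    """Edge length of the cubic scan volume implied by the voxel settings."""
    return voxels_per_axis * voxel_size_mm


def depth_error_mm(distance_m, percent_of_distance=1.0):
    """Depth error if it scales as a fixed percentage of the distance."""
    return distance_m * 1000 * percent_of_distance / 100


print(volume_side_mm(512, 2))   # scan volume edge in mm
for distance in (0.2, 0.5, 1.0):
    print(distance, "m ->", depth_error_mm(distance), "mm")
```

With these assumptions 512 voxels of 2mm span a cube of roughly one meter, and a 1% error only drops to the 2mm voxel size at about 20cm from the sensor. At 1m it is 10mm, so the voxel grid is not the limiting factor there.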

To start, let’s quickly take a look at the colors. From a distance they’re all okay’ish but zoom in and they’re blurry. Since RecFusion does per-vertex coloring, increasing the Volume Resolution can possibly result in slightly better textures but I can’t say how significant this is. Also, a higher resolution would be overkill for the 640×480 depth setting so you’ll just end up with an unnecessarily high polycount.

Hit 3 on your keyboard to view the embed in MatCap mode without the textures. The SR300 made the bust a bit chubby somehow but overall the geometry is very clean (again, the holes are because of the single-angle scanning from a tripod).

The “low-res” D415 result seems to reveal that the sensor uses circular structured light patterns or something like that since there are circular deformations on the surface. Also there’s not a lot of definition, possibly because of normalized noise. This moving noise has been smoothed out on the actual surface because the software fuses image data over time but it’s still visible on top of the model. The “high-res” version has slightly more detail (check the eyes). Possibly this could have been more with a higher Volume Resolution setting but I doubt it will be significant because of the severe noise.

The Astra S results have a few errors but are overall smooth, and even the “low-res” model contains more definition than the SR300 model with the same 640×480 depth resolution. The “high-res” model is a bit more defined but also contains a bit more surface noise. And since scanning was done at 3fps this was also the slowest scanning session of all.

Next up is a scan of a full-scale foam head. I chose this object because it doesn’t have so many details and a very smooth surface, making it harder to track (especially the back) and easier to spot noise on. For this comparison I’ve scanned with all 3 sensors at 640×480 resolution. I discarded color altogether since the foam head is all white anyway.

In this comparison it becomes very clear how the noise (which is probably even exaggerated by the white surface) on the D415 influences both the tracking and capture quality — it’s horrible! The face is completely different than the real thing and if you look at the back you can see that it didn’t capture the small indent in the skull which the other two sensors had no problem with.

Comparing the results from the two short-range sensors, you can see that they’re very similar in details but the Astra S scan is smoother and has fewer deformations.

To wrap up, a scan of a 30cm tall old jar with all three sensors:

By the way, the color quality in terms of hue, saturation and brightness can be easily controlled in RecFusion — both at the capture stage and afterwards — but I simply didn’t pay any attention to that here. Also, the deformations on the Astra S scan are probably because its projector and sensor are further apart compared to the RealSense arrays which becomes problematic when scanning smaller objects, especially from a fixed angle.

Although an object this size is only just within the comfort zone for a sensor like this, it’s clear that the D415 has the most noise and the SR300 and Astra S surfaces are similar, with the latter having the edge on overall smoothness.

Conclusion

First, let me repeat that I cannot judge color capture quality for texturing with the native RGB cameras in this test because of the per-vertex nature of RecFusion. In general, both RealSense sensors should give better texture quality with their Full HD (~2 megapixel) RGB cameras while the Orbbec Astra S can only capture textures at VGA (~0.3 megapixel) resolution, at least at a decent framerate for 3D scanning. But even a Full HD camera won’t cut it for creating quality assets for game and VR/AR development or mobile real-time 3D applications. That’s why HP added an external 14.2 megapixel RGB camera to the Astra S sensor array for its Sprout G2 (Review) and Z Camera (Review soon!) products, combined with software that can perform asynchronous UV-texture mapping.

But maybe you don’t care about color at all! Then it’s clear that Intel’s latest D400-series depth sensors, or at least the D415 (but I strongly believe the D435 performs similarly), are not a step forward for 3D scanning compared to the SR300 short-range sensor of the previous generation. I don’t know if it can be fixed with software, but the severe noise levels on my D415 make it unacceptable for 3D scanning, both in terms of tracking and actual geometry capture.

That is, of course, for the small objects I’m testing with in this post. The D415 can scan up to a whopping 10 meters, which I’ll test soon. At a larger distance and for larger objects or interiors, the noise levels might be more acceptable.

For objects, my advice would be to buy an SR300 if color capture is important to you, or buy an Astra S if you care more about smooth surfaces in the geometry. Or you could wait for the HP Z Camera to come out, since it features a secondary high-res RGB camera and software for quality UV-texture mapping, but you’ll have to wait for my review to know if that combination works out — and need to save a bit more since pricing will “start at $599” according to HP.

Next up I’ll do a full review of RecFusion and its editing features. Follow me on LinkedIn, Facebook or Instagram and be the first to know when that post is live!

* At least in my country, the M6 screw for the Astra tripod thread is not standard for photography equipment (although it is well-known in automobile/bikes spare part stores)

* Could the 91.2º FOV from the OV9282 imager (used in the D435 model) be really considered as a fisheye lens? Please correct me if not, but an angle considered as for fisheye would be 166º diagonal or 133º horizontal (from the ZR300 development model)

As far as I know there are two tripod thread sizes: 3/8″ (large) and 1/4″ (small) and all these sensors have the small variant. That’s also on all of my digital cameras.

True, that’s not a fish eye. I changed the text to wide angle.

Before I had ordered the Astra, I was warned about this M6 particularity: an official answer from someone at Orbbec (https://3dclub.orbbec3d.com/t/i-would-like-to-know-the-kind-of-the-screw-hole-of-the-astropro/1319/4 ) and a loud complaint from an Amazon customer (https://www.amazon.com/Orbbec-Astra-Pro-3D-Camera/product-reviews/B0748LHXTX/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews , the one dated Feb 24/2018 with 3 photos).

Later, when my camera arrived, I could confirm that the 1/4 size tripod does not fit, but M6 does (Is this M6 size very popular in the domestic Chinese electronics market? o.O ). According to Nick’s answer above, it is possible that some production batches (the older ones, I guess) came with the M6 hole, and some newer batches, at the request of many customers abroad, were built with 1/4.

Lastly, thank you Nick for your reviews and the work behind them. Greetings.

It’s interesting that there are apparently different batches of the sensor with different tripod mount screws. I guess I’m lucky. I’ll add a note to the post about this.

All cameras tested have different design points, such as having different optimal resolutions and distance of operation as well as depth settings.

Specific to the D415 you should make sure to operate at 1280×720 at about 45-50cm away. You should probably see if you can reduce the depth unit from 1000 (1mm) to 100 (100um). And possibly reduce laser power. If you reduce the resolution to 640×480 you will reduce the depth resolution by 2x.

For scanning you might want to use the “high accuracy” depth setting. If the app does not allow it, you can run the RealSense viewer first, select the settings, and then run the scanning app. If you don’t power down or unplug the usb cable then the new settings should persist.

Also regarding the background noise I might suggest using a textured background instead of a white background.

For the D435 you will want to be closer, like 20-25cm away.

Thanks for the tips, Anders. The app doesn’t seem to have these features but I will give the RealSense viewer workaround a try ASAP and update the post if results improve.
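For anyone wondering what the depth unit tip above trades off, here is a small sketch. It assumes depth is stored as an unsigned 16-bit integer per pixel and that the depth unit is expressed in micrometers, as in the “1000 (1mm)” and “100 (100um)” values above; the function name is hypothetical.

```python
UINT16_MAX = 65535  # assumption: each depth pixel is an unsigned 16-bit integer


def depth_unit_tradeoff(depth_unit_um):
    """Return (smallest depth step in mm, largest representable distance in m)."""
    step_mm = depth_unit_um / 1000.0
    max_range_m = UINT16_MAX * depth_unit_um / 1_000_000.0
    return step_mm, max_range_m


for unit in (1000, 100):
    step, max_range = depth_unit_tradeoff(unit)
    print(f"depth unit {unit}: step {step} mm, max range {max_range:.4f} m")
```

Going from 1000 to 100 makes the smallest step ten times finer (0.1mm instead of 1mm) while shrinking the largest representable distance from about 65.5m to about 6.55m, which is still more than enough for object scanning.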

I did all that with the D415 and the results are excellent now. It really makes a difference.

https://skfb.ly/6AMGJ

This is a D415 scan with my own profile for 20cm range scans.

Hi Guillermo,

could you give me the settings detail to get this result at 20 cm?

Thanks

Hi Yary, you need to play with these parameters:

In the Intel RealSense Viewer:

Stereo Module -> Advanced Controls -> Depth Table

– Depth Units has to be 100 for max resolution.

– With Depth Clamp Max you define the range of the camera, for example from min to 20cm max (the units are not cm).

– Disparity Shift: this is the most important setting. For example, if you put 319 there, the camera sees between 10-15cm and all the resolution is concentrated in those 5cm. That makes it difficult to scan an object, because if you go further away or closer the camera doesn’t see the object, but you will get amazing resolution.

So the total depth resolution is the same whether you work with a 10m range or a 20cm range. If you concentrate all this resolution in 20cm you will see the change.

If you play with these parameters you can get better results than with the default settings.

A test with a teddy bear:

https://sketchfab.com/models/7cd815e472a24c0fbb5c2e67267aac95
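The disparity shift behavior described above follows from the standard stereo relation, depth = focal length × baseline / disparity. Below is a rough sketch of how a shift moves the usable depth window. The 55mm baseline, 65° horizontal field of view and 126-step disparity search range are assumptions for illustration, not values taken from this thread, so check them against Intel’s documentation before relying on the output.

```python
import math


def focal_length_px(width_px, hfov_deg):
    """Pinhole focal length in pixels from image width and horizontal FOV."""
    return (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def depth_window_m(disparity_shift, width_px=1280, hfov_deg=65.0,
                   baseline_mm=55.0, search_range=126):
    """Nearest and farthest depth (in meters) for a given disparity shift.

    All default values are assumed, illustrative numbers for a D415-like sensor.
    """
    fb = focal_length_px(width_px, hfov_deg) * baseline_mm  # pixel * mm
    near = fb / (disparity_shift + search_range) / 1000
    far = math.inf if disparity_shift == 0 else fb / disparity_shift / 1000
    return near, far


for shift in (0, 319):
    near, far = depth_window_m(shift)
    print(f"shift {shift}: {near:.3f} m to {far:.3f} m")
```

Under these assumptions, a shift of 0 starts at a bit under 45cm and extends outward, while a shift of 319 squeezes the whole search range into a window of roughly 5cm starting a little beyond 12cm. That is the same order as the 10-15cm window reported above.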

none of them are worth a shit as far as i can tell

I honestly get sad reading reviews in the hope of finding that one good product, so I can stop wasting money and scan on a budget. I’m considering just holding off and buying the HP Z next month when I can splurge the extra money. I need to be able to replicate products so I’m not in the position to experiment anymore. I have an F200, a Kinect, and am about to finish a customized Raspberry Pi build using 4 cross line lasers and stereo HD color cameras. I may use Google’s structured light project and try my projector with the cameras and see what results we get. The thing about Intel is they now have the NUC with a D415 built inside using their SDK. I cannot find a single review on that or on their small Pi-like aeonn board with D415. I have a nice white backdrop that sits behind the automated turntable, so I don’t know if something like that would help the noise factor, or perhaps a green screen. I thought a green screen wouldn’t cause noise. I have one here I haven’t put to use yet. If you could get Intel to have you try those two integrated systems with their SDK that would be great, and it might show why results are not good with 3rd party software or whether a backdrop is necessary with them. I’m torn between the D415 and D435 but it appears the 415 is the normal go-to. I also don’t understand why better cameras are not used. These products don’t cost Intel more than $30 to manufacture, so by all means they have room to improve.

After more research I have found that the Intel 400 series works best on high-powered desktops with upgraded GPUs supporting CUDA. I have since purchased a D415 and a D435 and can confirm that you need a solid PC. I noticed you did not compare the Razer Stargazer, which is a known SR300 that has had quite a lot of development put into it. I would like to see you explore some of these other technologies and hear the results, as well as an updated attempt with the D400 series sensors.

“Note: All models were captured with RecFusion’s Volume Resolution set to 512×512 which translate to a “voxel size” of 2mm, meaning it won’t capture details smaller than that. Theoretically these sensors have a higher depth resolution than that at close range (the resolution is distance-dependent). For instance, Intel states that the D400 series is less than 1% of the distance, meaning ~1mm at 1m.”

Wouldn’t 1% of 1m correspond to 1cm depth resolution? That seems quite a lot on the other hand. Do you have any more information on the depth resolution I could expect from the SR300/SR305?