This review of the Skanect 3D scanning software has been a long time coming and is one of the most-requested ones, too. One of the first 3D scanners I reviewed was the Structure Sensor. I was planning to make a review trilogy of that, starting with testing it with the free (sample) apps by manufacturer Occipital. The second episode was a review of the excellent itSeez3D software.

I actually started testing Skanect right after that, but although I liked the overall workflow I was disappointed by the texture quality. After some research, I discovered that a new version — 1.9 — was being developed and would fix that very problem, among other improvements. So I postponed the review and started beta-testing the new version.

Skanect 1.9 has been in development for over half a year. Let’s find out if it was worth waiting for!

Sensor Compatibility & Pricing

Many people take their first steps into 3D scanning by using a depth sensor like the $379 Structure Sensor, mainly because it’s affordable and they already own a compatible iPad. Currently even more affordable is the similar iSense, which is discontinued but greatly discounted (as low as $75). I tested the one I got on eBay with Skanect 1.9 and had no problems, but all tests in this review were performed with a Structure Sensor and the latest 2.0 firmware.

So Structure Sensor users are naturally seeking affordable software, but there lies a problem I hear often (as in almost daily): there isn’t much choice. The free sample app works well, but is limited in functionality and scan quality. And while itSeez3D delivers a completely automated workflow and outstanding 3D scans, their current business model requires a combination of a monthly subscription and a per-scan fee if you want to do anything with the scans beyond sharing them online.

Skanect has a free version but that can’t be used for commercial purposes and is limited to 5000 polygons, which makes it impossible to export anything useful. Luckily the Pro version is very affordable at $129 / €119 (plus VAT). If you don’t have a Structure Sensor you can also buy it bundled with Skanect for $499.

Although I’m testing Skanect with a Structure Sensor, you can also use it with Asus Xtion or PrimeSense Carmine sensors, as well as the Microsoft Kinect for Xbox 360 (not the Xbox One / V2 version, since that one isn’t very good for 3D scanning).

I’m using an iPad to stream the data from the Structure Sensor to a PC over WiFi, but you can also use the $39 USB Hacker Cable to connect it directly to a computer. Be aware that you can’t capture color textures that way, since the Structure Sensor has no RGB camera.

How Skanect Works and What’s New in 1.9

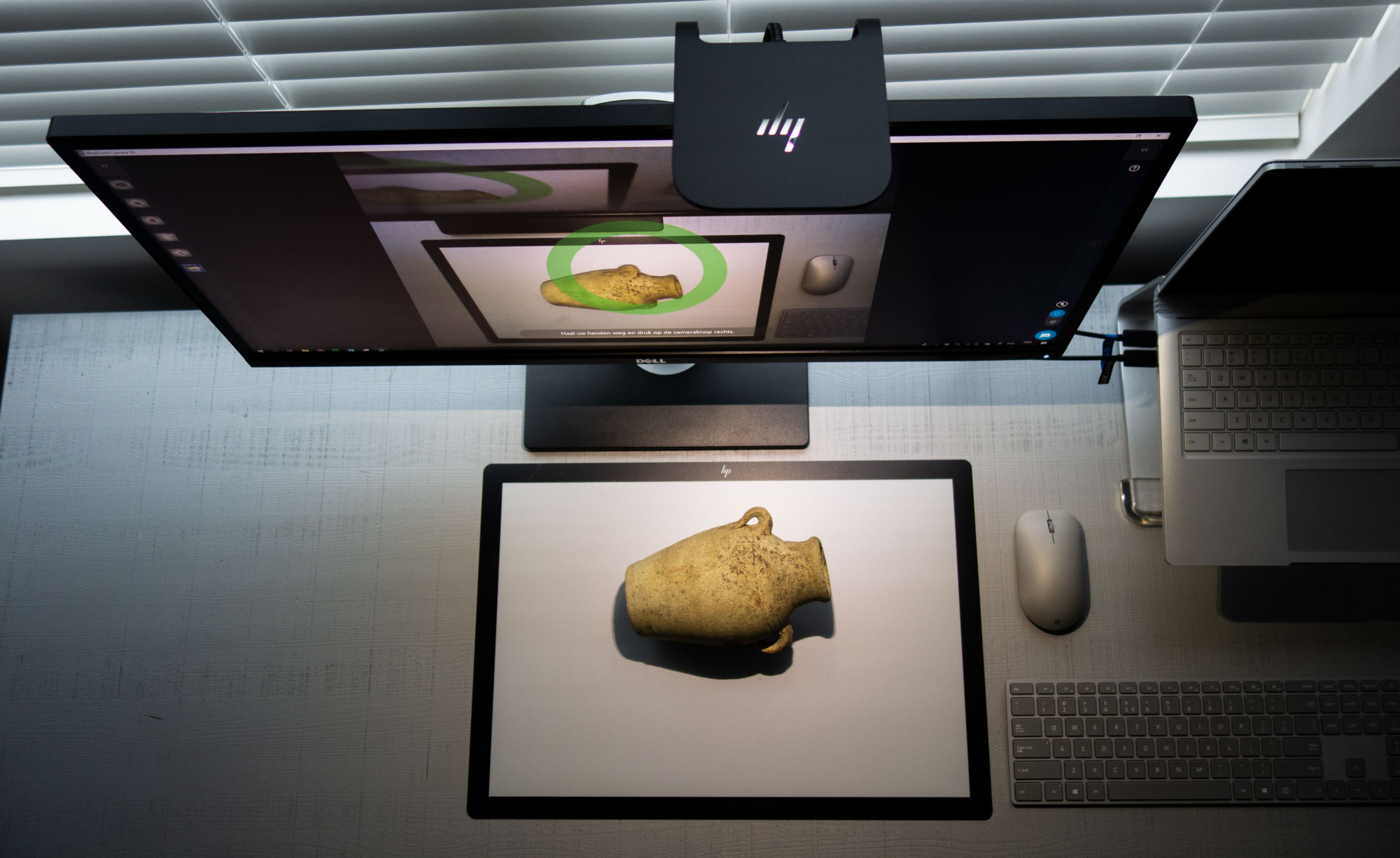

Skanect is not a mobile app. It works in a different way than the other apps I’ve tested so far. Instead of fusing scan data into a polygonal model in real time on the iPad itself, like the Occipital Scanner App, or in the cloud, like itSeez3D, Skanect runs on a PC or Mac and performs the fusion there.

[column lg=”6″ md=”12″ sm=”12″ xs=”12″ ]

So the iPad is basically just a camera. When Skanect is running on your Mac or PC on the same network as the iPad, the Structure iOS App will detect it and offer an Uplink mode. This allows the app to stream the depth and color data to the external computer, where the real time fusion is performed. A (Predator-ish) preview of this is sent back to the iPad screen so you can see what you’re doing.

[column lg=”6″ md=”12″ sm=”12″ xs=”12″ ]

The wait is almost over: After months of development I’m now reviewing the last Skanect 1.9 beta.#3Dscanning with @Structure Sensor & iPad pic.twitter.com/ONQeSUoQla

— 3D Scan Expert (@3DScanExpert) April 3, 2017

There are two important factors to make this setup work smoothly. The first is a fast WiFi network that isn’t being used for other bandwidth-intensive tasks. Preferably this is a direct computer-to-computer (ad hoc) network, but I had no problems using our studio’s 5GHz WiFi.

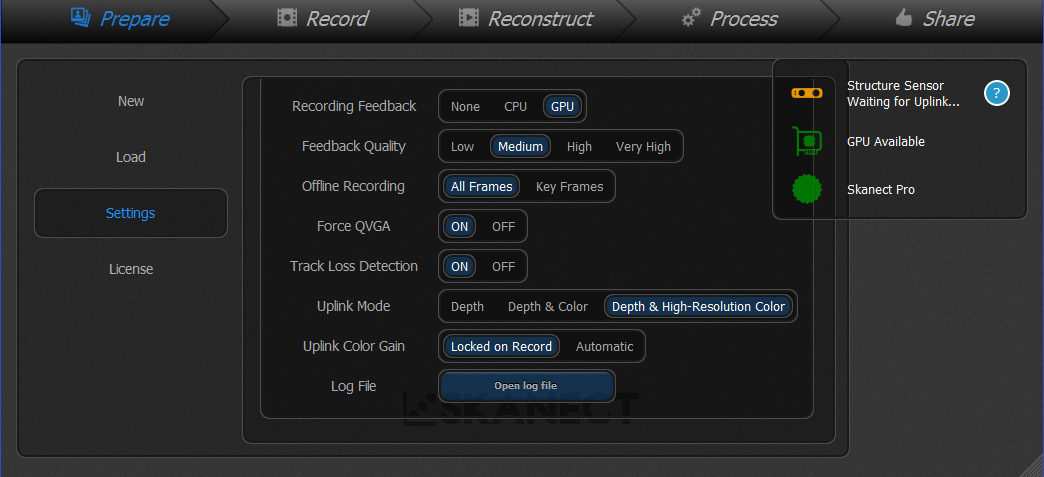

The second factor is a good Nvidia graphics card. Skanect also offers a CPU mode that runs on the processor, but I had a much smoother experience in GPU mode. My Windows PC has an Nvidia GTX 1070 with 8GB of VRAM. With version 1.9, Skanect specifically supports CUDA 8 and the latest Titan-based GPUs so it’s great to take full advantage of that. The main benefit of a fast graphics card is that it allows you to set the Feedback Quality to Very High, so the real time fusion is performed at this quality too, which saves time.

My 2012 MacBook Pro with GT 650M (be aware that the latest Apple computers all have ATI GPUs incompatible with Skanect) also worked well after installing the CUDA drivers, but only in Medium Feedback Quality. This means I had to re-process the scan data (on the CPU) to get the most detail out of it. More about this later.

Aside from CUDA 8 support, the biggest new feature of Skanect 1.9 is the High Resolution Color Uplink mode. In earlier versions, the Structure iOS app would simply stream the high-FPS depth and color data to the computer. Now, it will also take high-resolution photos once in a while for better texture quality. To prevent motion blur it will only do this if you move very slowly, but the iPad app prompts you to slow down if you’re moving too fast.

The Skanect Workflow

Skanect is not just a 3D scanning application. It offers a complete workflow from capture to export and has quite a complete suite of mesh editing tools. The workflow steps are organized in different tabs on the top of the interface. Let me walk you through them.

After selecting the Settings you want, it’s important to define the size of the scan area. There are a few presets but you can also drag the slider up to an area of 12 x 12 x 12 meters (if your PC can handle that amount of data, I guess). It’s also wise to give your scan a sensible name at this point.

After selecting the Uplink mode on the iPad, you can start and stop recording from there. Tracking is very good when scanning objects or people. Room scanning is a bit trickier because walls usually have very little trackable data. More about that later.

After scanning, the software needs a minute to organize all scan data. It’s noteworthy that after it says it’s done, it can take another minute for the mesh to appear. According to the changelog this is because of the new CUDA-based fusion method, but it would have been nice if the interface showed that it’s still working.

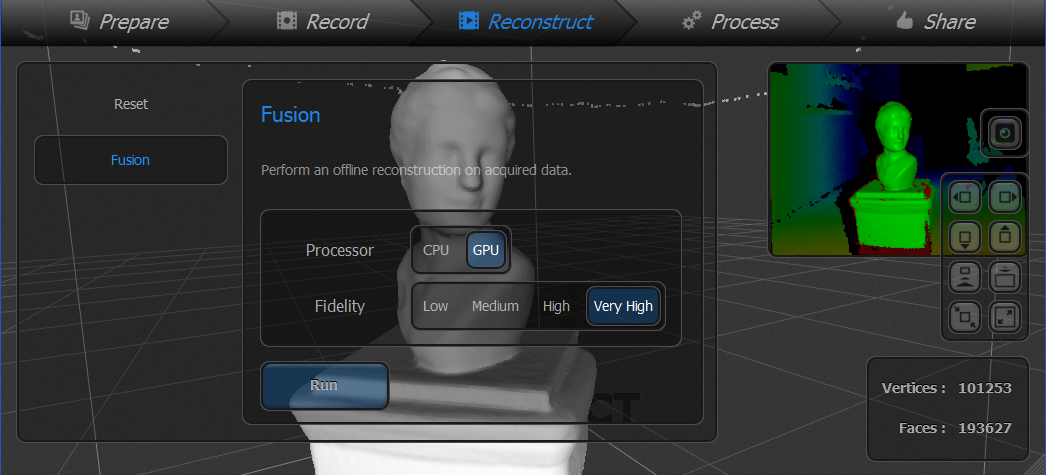

Whether or not you have a fast GPU capable of running the recording in Very High mode, you can re-process the raw scan data in the Reconstruct tab. This can also be done on the CPU.

Regardless of what you’re scanning, I advise either scanning at the Very High setting or re-processing the data this way to get the most out of the scan data. In some cases this might add some unwanted noise to the geometry, but you can smooth that in the next step. It’s noteworthy that while reconstruction worked fine on my object and person scans, it made a mess of most room scans I tried.

The Processing tab has all kinds of editing features and many offer a lot more control than competing software. I won’t go into every feature but here are some of the most important ones.

The menu on the left is split into different categories. The first, Mesh, is actually very handy because it lets you reset the mesh to its after-scanning state in case you don’t like the edits you made. The Watertight function is Skanect’s one-click feature for smoothing, hole-filling and colorization and produces good results in most cases. External Edit is helpful if you want to use an external editing program (that supports the .PLY format).

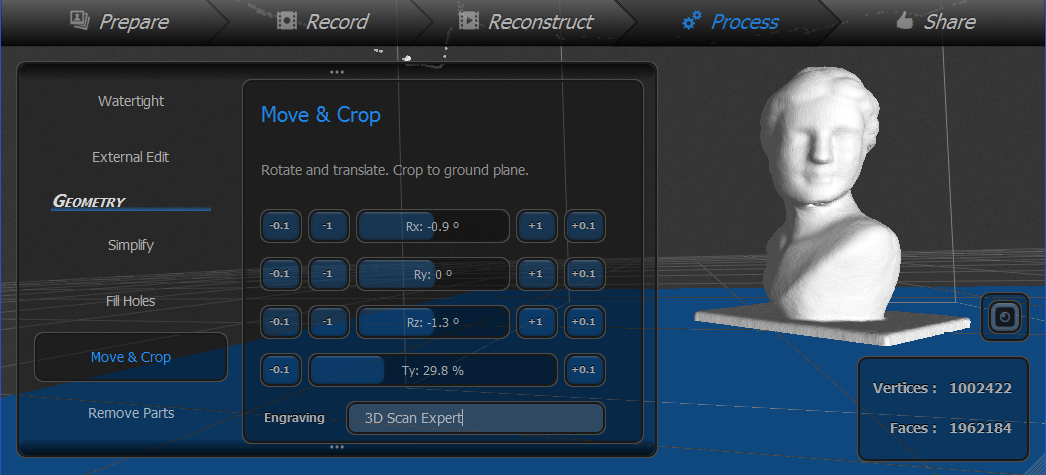

The Geometry section allows you to Simplify (decimate) the model and perform hole-filling operations. The latter is quite comprehensive because it also supports non-watertight methods. Move & Crop (pictured below) not only allows fine control over rotation and floor-level cropping but can also engrave the floor surface with text. This can be handy for branding or copyrighting models.

Remove Parts allows you to quickly delete orphan geometry based on its size as a percentage of the main model. It works surprisingly fast!
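To make the idea behind a percentage-based cleanup like this more concrete, here is a minimal sketch in Python. It is not Skanect’s actual implementation (I don’t know how that works internally). It simply groups triangles into connected parts and drops every part that is smaller than a given fraction of the largest one. The function name `remove_small_parts` and the `min_ratio` parameter are made up for this illustration.

```python
from collections import defaultdict


def remove_small_parts(faces, min_ratio):
    """Keep only the connected parts whose face count is at least
    min_ratio times the face count of the largest part.

    faces: list of (a, b, c) vertex-index triples.
    min_ratio: hypothetical threshold between 0 and 1.
    """
    parent = {}

    def find(v):
        # Union-find lookup with path halving.
        parent.setdefault(v, v)
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b

    # Faces that share a vertex end up in the same part.
    for a, b, c in faces:
        union(a, b)
        union(b, c)

    # Group the faces by the root vertex of their part.
    parts = defaultdict(list)
    for face in faces:
        parts[find(face[0])].append(face)

    if not parts:
        return []

    largest = max(len(part) for part in parts.values())
    return [face
            for part in parts.values()
            if len(part) >= min_ratio * largest
            for face in part]


# Three connected triangles plus one floating triangle far away.
mesh = [(0, 1, 2), (1, 2, 3), (2, 3, 4), (10, 11, 12)]
cleaned = remove_small_parts(mesh, min_ratio=0.5)
```

With a threshold of 0.5, the single floating triangle is removed because it is smaller than half of the main part. With a much lower threshold it would be kept.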

Notably missing from Skanect’s mesh-editing toolbox is a hollowing feature. Making models hollow with a thin wall and escape holes is something many professionals do to save on 3D printing costs with SLS technology used for full color sandstone prints.

Color unsurprisingly lets you apply color to the model. If you chose the High Resolution color setting, Skanect will use the separate photos as much as possible and fill in the rest with the lower-resolution color if necessary.

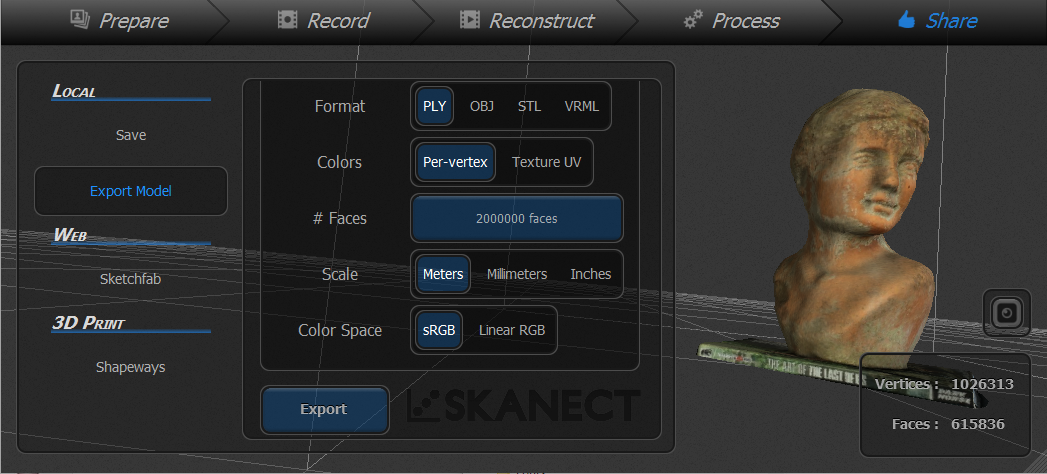

Skanect uses per-vertex coloring to apply color to the mesh. This means that the quality of the texture will depend on the polycount of the mesh. As you can see below, setting a higher resolution for the colorize feature greatly increases the quality but also increases the number of polygons (or faces) tenfold. The polycount seems to be capped at 2 million, but that is way too much for most (real time) 3D purposes.
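A quick back-of-the-envelope calculation shows why per-vertex color makes the polycount explode. The sketch below assumes an evenly spaced grid of color samples over the surface and roughly two triangles per vertex, which is typical for a closed triangle mesh. The 0.5 m² surface area is a made-up example value, not a measurement of any scan in this review, and the function names are my own.

```python
def vertices_for_color_resolution(surface_area_m2, spacing_mm):
    """Rough number of vertices needed to store one color sample
    every spacing_mm across the surface (even grid assumed)."""
    area_mm2 = surface_area_m2 * 1_000_000
    return round(area_mm2 / (spacing_mm ** 2))


def approx_faces(vertex_count):
    # A closed triangle mesh has roughly two faces per vertex.
    return 2 * vertex_count


area = 0.5  # hypothetical surface area in square meters

for spacing in (4, 2, 1):
    verts = vertices_for_color_resolution(area, spacing)
    print(f"{spacing} mm spacing: ~{verts:,} vertices, ~{approx_faces(verts):,} faces")
```

The point is that the count grows with the square of the resolution: halving the sample spacing quadruples the number of vertices and faces, whether the underlying shape needs that much geometry or not.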

The Prioritize First Frame feature is nice, because it ensures that the front of objects or the face of a person is made up from a single texture instead of multiple blended parts that can look blurry.

The Share tab contains all saving, exporting and sharing functions. Exporting can be done to .PLY .OBJ .STL and .VRML. You can opt for Skanect’s native per-vertex coloring or let the software generate a UV texture map.

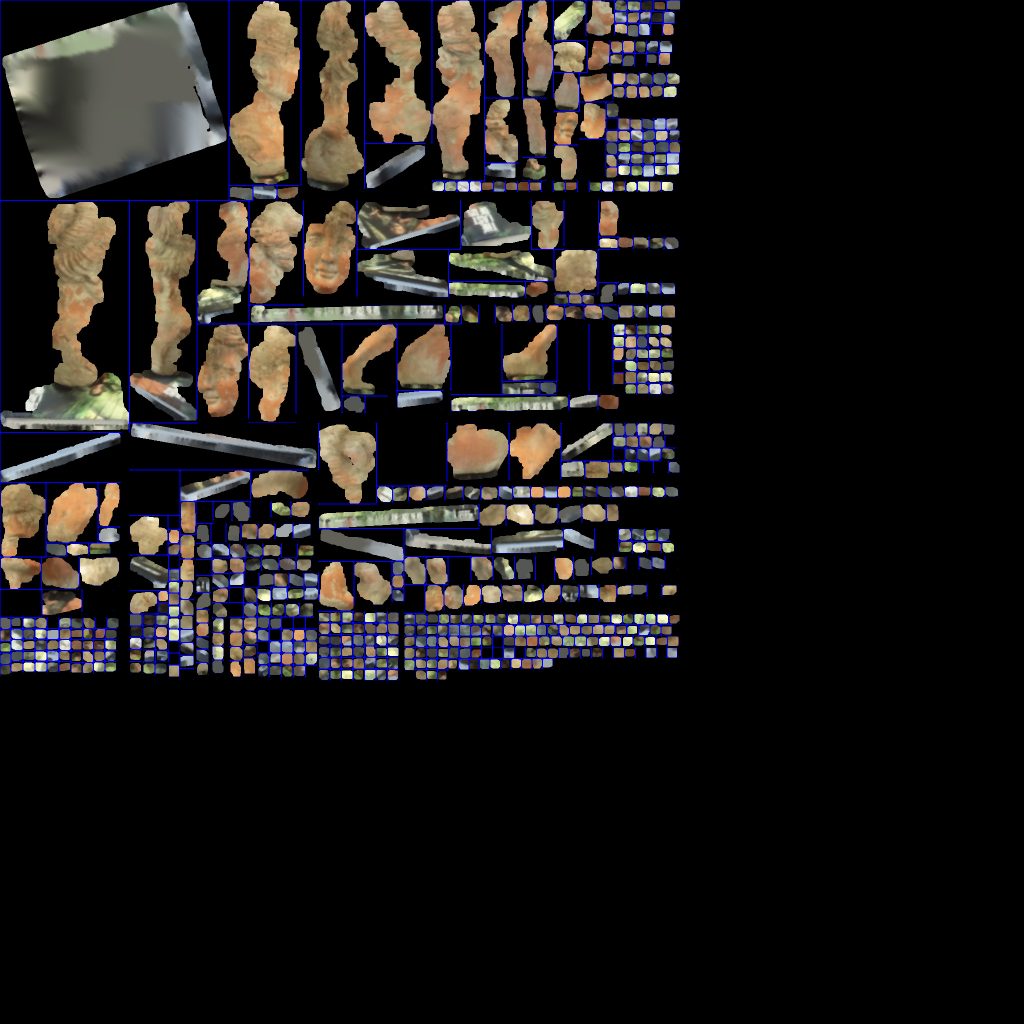

Since Skanect can decimate geometry on export, it should be possible to create a low-poly mesh with a high-quality texture. Unfortunately it seems impossible to retain the 1mm per-vertex color resolution when generating a UV texture. Depending on the object size and settings, Skanect will either export a 2K or a 4K texture but the island mapping is very inefficient:

With this amount of unused space, it’s no surprise that UV-textured exports have lower texture quality than vertex-colored ones. I included some examples of this below. There’s clearly room for improvement in the texture mapping department for Skanect 2.0.
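To put the effect of unused texture space into numbers, here is a small sketch. The coverage value (the fraction of the texture actually filled by UV islands) and the surface area are hypothetical inputs chosen for illustration. I did not measure them on Skanect’s exports, and the function names are my own.

```python
def effective_texels(texture_size, coverage):
    """Texels that actually carry color when only `coverage` (0..1)
    of a square texture is occupied by UV islands."""
    return int(texture_size * texture_size * coverage)


def texel_spacing_mm(surface_area_m2, texels):
    """Average distance in mm between color samples on the surface."""
    area_mm2 = surface_area_m2 * 1_000_000
    return (area_mm2 / texels) ** 0.5


# Hypothetical case: a 4K texture of which only a quarter is used.
used = effective_texels(4096, 0.25)
print(used, "texels in use, the same as a fully used 2K texture:",
      used == effective_texels(2048, 1.0))
print("sample spacing on a 0.5 m2 surface:",
      round(texel_spacing_mm(0.5, used), 3), "mm")
```

In other words, a 4K texture that is only a quarter full carries no more color information than a fully packed 2K texture, so better island packing would improve quality without a larger file.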

It’s great that Skanect allows direct export to Sketchfab for online sharing and Shapeways for 3D printing. Almost all examples below are uploaded directly from the software which saved me a lot of time. Skanect uses per-vertex coloring and doesn’t decimate the model before uploading it to Sketchfab. This gives the best-possible output, but as most models have the maximum polycount of 2 million they aren’t very web or mobile — and certainly not VR — friendly. It would be great to have more control over this.

3D Scan Results

Let’s start with the bust from the screenshots. Here’s the result fused at the Very High setting, captured with the High-Resolution Color Uplink mode and colorized at 1mm resolution:

http://sketchfab.com/models/14828370801c43c08120de15150ae61d

For comparison, here’s the same object without High-Resolution Color. This is what it would have looked like in previous versions of Skanect.

If you zoom in on the face of the bust it’s clear that the new texturing method offers a significant quality improvement. And because I know many of you want to make the comparison, here’s the result from itSeez3D (captured earlier but with the same light kit):

It’s clear that itSeez3D delivers better texture quality but with version 1.9 Skanect is starting to get quite close. The biggest difference is that the itSeez3D bust is a relatively light 50k poly model with a high-res texture while the Skanect model has 750k polygons. It might have captured a bit more geometric detail but most of the polygons contain geometric noise.

The bust above is 40cm tall which could be considered the lower end of the scan size range for the Structure Sensor. So let’s see what a human bust looks like with Skanect 1.9:

First, let me show you what difference the Feedback / Fidelity settings make for the final result. I used the same scan data of my business partner Patrick. The first embed below is processed at Medium Fidelity and the second one at Very High:

Quite a difference, especially for his face. So it’s really worth either performing an offline reconstruction or getting a faster graphics card with more memory (Very High needs at least 2GB of VRAM).

If you put the last embed above in MatCap render mode, you again see that there’s quite a bit of geometric noise from the Very High Fidelity fusion but there’s enough geometric detail for small-scale 3D printing. With the texture applied, I’m quite surprised and happy with the result — this would not have been possible with earlier versions of Skanect.

To illustrate the point made above about the reduced quality when exporting the vertex-colored native model to a decimated OBJ with a UV texture map, here’s that result:

It’s hard to quantify exactly, but while the polycount has been reduced from 2M to about 1M, the color quality has also degraded (check the details in Patrick’s beard). It’s not extreme, but it’s noteworthy for a texture-centered update in my opinion.

Comparison with the other apps

For comparison, here’s Patrick scanned (at different moments, but comparable conditions) with the Occipital Scanner Sample App (top) and itSeez3D (bottom):

As you can see, the result of Skanect is much better than that from the Scanner app. But itSeez3D still beats Skanect for textures, although the difference got a lot smaller with Skanect 1.9.

But why is Skanect still a bit behind?

I don’t know all the technical details (and feel free to correct me) but I do know that when scanning with itSeez3D the texture photos are taken at full resolution and can also be used for texturing at this resolution if you tick that setting in the app.

Skanect 1.9 now also captures photos while scanning, but only at 1.2 megapixel resolution, while the RGB camera on my iPad mini 2 has a resolution of 5 megapixels. So a lot of small details get lost there. I guess this has to do with the fact that all data has to be sent to the computer wirelessly in real time. With the current resolution the capturing is already paused for a very short moment when a photo is taken, so it’s possibly a trade-off between quality and user experience.
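For a feeling of what that difference means, here is a small calculation. It only uses the two megapixel figures mentioned above. The raw size assumes uncompressed 8-bit RGB (3 bytes per pixel), which is my own simplification: the actual stream is most likely compressed, so treat the megabytes as an upper bound rather than what Skanect really sends.

```python
def linear_detail_ratio(full_mp, captured_mp):
    """How much finer the full-resolution photo is along one axis."""
    return (full_mp / captured_mp) ** 0.5


def raw_rgb_megabytes(megapixels):
    """Uncompressed size of one photo, assuming 3 bytes per pixel
    and 1 MB = 1,000,000 bytes."""
    return megapixels * 3


print("pixel count ratio:", round(5 / 1.2, 2))
print("linear detail ratio:", round(linear_detail_ratio(5, 1.2), 2))
print("raw size at 5 MP:", raw_rgb_megabytes(5), "MB")
print("raw size at 1.2 MP:", round(raw_rgb_megabytes(1.2), 1), "MB")
```

So the full-resolution photos would hold about four times as many pixels, or roughly twice the detail in each direction, at about four times the data per photo.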

[well type=””]

Skanect vs. itSeez3D (for Full Color 3D Printing)

I’m aware that many readers of this post are wondering if the Skanect 1.9 update brings the (texture) quality in line with itSeez3D, especially for 3D scanning people to 3D print mini figurines. Apparently many people are looking into starting a business in that direction.

To keep this post focused on Skanect, I’ve made a separate post about the Skanect vs. itSeez3D topic.

[tw-button size=”large” background=”” color=”” target=”_self” link=”https://www.3dmag.com/reviews/3d-scanning-skanect-vs-itseez3d-full-color-3d-printing/”]Read my Skanect vs. itSeez3D Comparison[/tw-button]

[/well]

Room Scanning

Another question I often get is: Can I use the Structure Sensor to scan interiors?

As with almost all 3D-scanning-related questions, the answer depends on the purpose. Simply put: if you want to use a sensor to efficiently take a lot of measurements in an interior space it can be a really helpful tool. Occipital even has a dedicated app for that called Canvas.

If you’re aiming for more aesthetic presentations like real estate showcasing, depth sensor-based 3D scanning isn’t there yet. The results simply aren’t eye-pleasing enough for commercial use, and most interiors contain a combination of shapes and surfaces that is too tricky to capture completely. 3D scanning still has a long way to go to compete with 360-degree photography in this field. The way Matterport combines those two technologies is the only best-of-both-worlds approach I know of that currently exists.

To put 3D Room Scanning with the Structure Sensor into perspective (some pun intended) here’s a scan I made with the lightweight and easy-to-use (and free) Room Capture iOS app for Structure Sensor.

And here’s the result I made with Skanect at Very High Fidelity:

The door in the back and other parts might look a lot better because of the better textures and there might be a bit more geometric detail here and there, but overall it’s still like a nuke has gone off in the studio. I can’t think of a (professional) purpose for this beyond measurements.

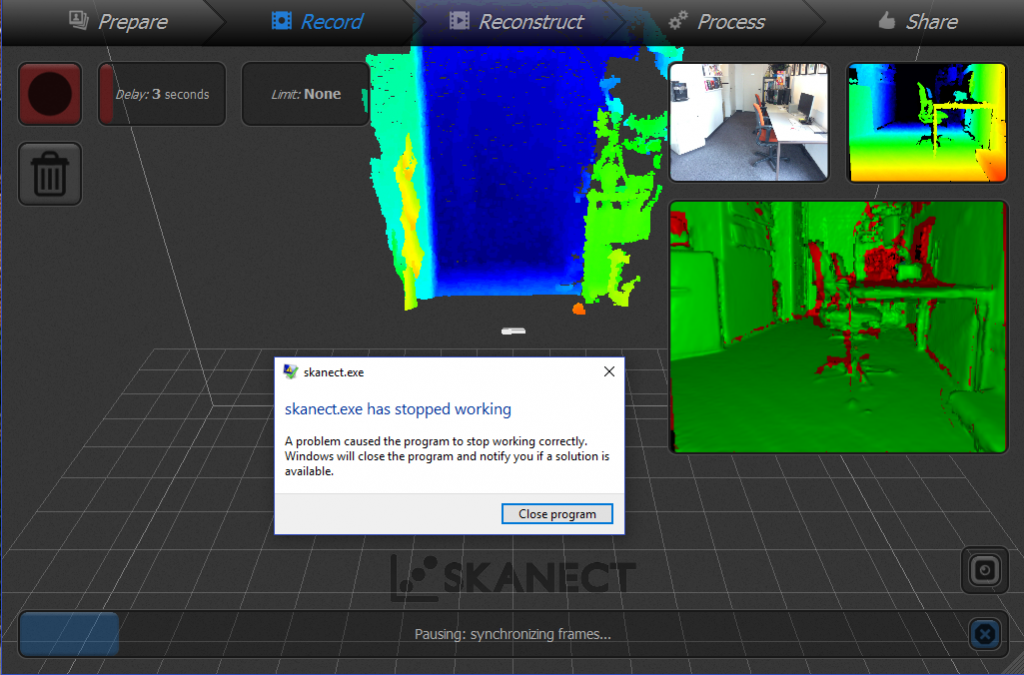

Quality aside, scanning a room with Skanect was quite a lot more work because the application crashed constantly during post-scan processing (“synchronizing frames”) with loss of all data. This happened with Medium Feedback Quality as well, but only with “big” scans like this room, not with objects or people.

Verdict

Skanect 1.9 is an improvement in many ways, most notably texture quality and support for the latest Nvidia graphics cards. But the former is still behind the texture quality of itSeez3D and the latter requires an additional hardware investment to take full advantage of the workflow.

Although Skanect can now also capture photos while scanning, they’re still not at full resolution. The color quality of version 1.9 is more suitable for small-scale full-color 3D printing than previous versions but for screen-based purposes it’s still not on par with the competition. And with its native per-vertex-based coloring technique and inefficient UV texture mapping algorithms it’s impossible to export a low-poly model with high resolution textures.

Skanect does offer more versatility than purely iPad-based apps. It allows you to set a scan area up to 12 x 12 x 12 meters and scan as much as your PC can handle. Unfortunately, Skanect crashed often (during post-scan processing with loss of all data) when trying to scan our small studio on my brand new PC with 32GB of RAM and an Nvidia GTX 1070 with 8GB of VRAM and all the latest drivers. Luckily it was 100% stable when scanning objects and people.

For $129 Skanect is very affordable. For that you get an application that offers a complete 3D scanning workflow from capture to export. The editing features are comprehensive and work well and fast — even on large meshes. But since the polycount is capped at 2 million it’s impossible to push the software to my hardware’s limits.

If you have a Structure Sensor or iSense and are considering buying Skanect, you can always try the free version first and see how it performs on your system. It’s fully functional internally, just capped at 5000 polygons for exporting.

I’m looking forward to version 2.0, although I’m unsure if that will be a free update for 1.x users.

(This post has been edited after publishing to move the comparison with itSeez3D to a separate post, to expand that part and keep the review focused on Skanect 1.9.)

Would like to see a review of KScan3D, the free 3D scanning software for Kinect and Asus sensors. Also looking forward to hearing more about the latest Intel RealSense ZR300 camera.

Hi Anil,

I did a short test of KScan3D and didn’t like it because it has no realtime fusion. In my opinion that’s a necessity for sensor-based scanning these days.

Intel isn’t really targeting 3D scanning with the RealSense ZR300 since it’s currently not compatible with the main RealSense SDK for Windows 10. The ZR300 only works on Linux and through Intel Joule. I guess it’s more of a tracking sensor for IoT applications.

Hello Nick,

I liked the results you got from skanect – the Patrick’s bust. What was your configuration on Process tab for that specific scan? The Watertight function – kind of Skanect one-click feature for smoothing, hole-filling and colorization or Fill Holes – geometry menu? Did you use the colorize option – under color menu? Did you enable the prioritize First Frame? Because I haven’t had good results with prioritize First Frame option enabled, the face texture doesn’t align very well.

Thank you.

Hi Bruno,

I never used the Watertight function but instead used the separate functions. I think I had all settings on default. I didn’t use smoothing. I do remember it’s best to make a scan without holes because the hole-filling algorithms changed the geometry somehow. I used the colorize function from the colorize menu and I did use the first frame priority.

But the first thing I always ask in case of bad Structure Sensor scans: do you use a light setup? If not, you will probably never get the results I’m getting. At least not the color quality. And this was done on the cheapest iPad mini 2 model.

I’m trying to get into the world of 3D scanning, but there’s so much on offer that I’m a bit lost, and my budget for starting the project is limited. I can’t figure out which 3D scanner would be best for scanning a motorcycle to edit in Blender without losing the detail of the object; the texture is not the most important part. If you could point me in the right direction at this stage of the project, that would really help me get started.

Great review Nick. I paid for the Pro version of Skanect more than a year ago and was using Kinect to scan my nephews and nieces. The purpose of scanning was to 3D print their busts. Surprisingly the busts printed quite nicely — not color, but the resolution was decent.

It has been about six months since your review, so I’m wondering if any of your opinions have changed. I’m considering purchasing a Structure Sensor to use with latest iPad Pro and wanted your feedback. Given it’s nearly Nov 2017, is there anything better out there in this price range (or less than $1,500)?

Thanks in advance!

Nick, thanks very much for this informative article. I’m relatively new to 3D scanning. My idea is to scan students (bust only) and have a fundraiser for my school. I need an affordable solution with easy scanning with above average resolution quality. Is there an option for me?

Hi,

What software suite/app would you recommend in a scenario where you need to scan a patient’s knee to manufacture a product for them?

thanks

Hey there,

Thanks for the review! Trying to decide which software to get, and the higher geometric resolution of Skanect (vs itSeez) is important, as I’ll be using them as base meshes for high poly sculpting…. but, I’ve got an AMD card. I understand I won’t be able to use the GPU option to record in “very high”, but will I still be able to reconstruct at very high quality on the CPU (again, my main concern is getting as much geometric resolution/detail as possible)?

Thanks in advance.

hello Nick,

I’ve got an iSense and am desperate to find a way to get it working, because the iOS app is no longer available … I read all your articles but I’m still lost in space.

Thanks for your help getting my scanner functional again!

Hello, I have a problem with the uplink on my scanner (Structure SDK on my iPad 6). When I turn it on it goes green, so active, but as soon as I make a scan with the iPad it immediately goes back to yellow, so inactive. I’ve never had this before. What could I do about this?